Background:

Risk stratification is critical for balancing the potential benefit and harm of allogeneic hematopoietic stem cell transplantation (HSCT). However, current stratification tools are suboptimal, and better markers are needed. Lactate dehydrogenase (LDH) is a readily available biomarker with a well-established prognostic role in the front-line therapy setting of hematological malignancies. Only a few reports, limited to selected diseases, have studied the utility of LDH for pre-transplant risk stratification. We therefore sought to systematically study the prognostic role of LDH in patients with various hematological malignancies undergoing allogeneic HSCT.

Methods:

This was a retrospective study of a cohort of patients who underwent allogeneic HSCT at a single center between 2000 and 2016. LDH levels on the day before conditioning initiation were extracted from the electronic medical record, along with patient, disease, donor, and transplant characteristics. The primary outcome was overall survival (OS); secondary outcomes were non-relapse mortality (NRM) and relapse, which were treated as competing events. The median follow-up time was 59 months (IQR 27-112). Within each disease category, patients were dichotomized at the median LDH level, and the association with each outcome was analyzed using Kaplan-Meier estimates (OS) or cumulative incidence curves (competing events). Associations with a p-value below 0.05 for the primary outcome were further explored in a multivariable Cox regression (cause-specific Cox for competing events). The model was adjusted for age, disease risk index (DRI), year of HSCT, donor type, and conditioning regimen.
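The univariate step described above (split at the median LDH, then estimate survival in each half) can be sketched as follows. This is a minimal illustration and not the authors' code: the function names are hypothetical, the toy cohort is invented for demonstration and is not study data, and in the study the split would be applied separately within each disease category.

```python
from statistics import median

def kaplan_meier(times, events, horizon):
    """Kaplan-Meier survival probability at `horizon`.

    times  : follow-up time per patient (e.g. months)
    events : 1 if death was observed at that time, 0 if censored
    """
    surv = 1.0
    # Walk through the distinct death times in increasing order.
    for t in sorted({t for t, e in zip(times, events) if e == 1}):
        if t > horizon:
            break
        at_risk = sum(1 for u in times if u >= t)
        deaths = sum(1 for u, e in zip(times, events) if u == t and e == 1)
        surv *= 1.0 - deaths / at_risk
    return surv

def os_by_median_ldh(ldh, times, events, horizon=36):
    """Split a cohort at its median LDH; return the cut-off and OS per half."""
    cut = median(ldh)
    groups = (("low", lambda x: x <= cut), ("high", lambda x: x > cut))
    result = {}
    for label, keep in groups:
        idx = [i for i, x in enumerate(ldh) if keep(x)]
        result[label] = kaplan_meier(
            [times[i] for i in idx], [events[i] for i in idx], horizon
        )
    return cut, result

# Hypothetical toy cohort (NOT study data): LDH in IU/L, follow-up in months.
ldh = [150, 160, 170, 180, 200, 210, 220, 240]
times = [40, 50, 20, 60, 10, 15, 45, 30]
events = [0, 0, 1, 0, 1, 1, 0, 1]

cut, os36 = os_by_median_ldh(ldh, times, events)
print(f"median LDH cut-off: {cut}")
print(f"36-month OS, low vs. high LDH: {os36['low']:.2f} vs. {os36['high']:.2f}")
```

Comparing the two curves formally would additionally need a test such as the log-rank test, and the competing-event outcomes (NRM, relapse) call for cumulative incidence estimates rather than Kaplan-Meier; neither is shown here.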

Results:

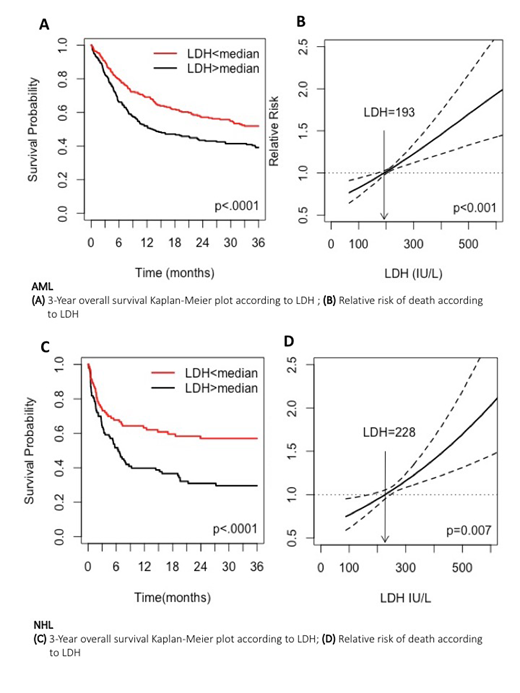

A total of 1,250 adult patients (median age 52.8 years) were included in this study. Acute myeloid leukemia (AML) was the leading indication for transplantation (n=594, 48%), followed by non-Hodgkin lymphoma (NHL, n=211, 16.9%), acute lymphoblastic leukemia (ALL, n=175, 14%), myelodysplastic syndrome (MDS, n=165, 13%), and plasma cell dyscrasia (PCD, n=105, 8%). Most commonly, patients had intermediate-risk disease (44%), had a sibling or matched unrelated donor (51% and 31%, respectively), and received myeloablative or reduced-toxicity conditioning regimens (27% and 36%, respectively). In the univariate analysis (values given as lower- vs. higher-LDH group), AML patients with higher-than-median LDH levels (>193 IU/L) had lower 3-year OS (51.9% vs. 39.2%, p<0.001) (Fig. 1A), a higher cumulative incidence of NRM (15.7% vs. 19.6%, p<0.04), and a higher relapse incidence (RI) (26.7% vs. 40.7%, p<0.0001). We constructed a cubic spline (Fig. 1B), which showed that the relationship between increasing LDH and reduced OS was linear (p<0.001). Higher LDH maintained its prognostic capacity in the multivariable Cox model for OS (hazard ratio [HR] 1.34, 95% CI 1.06-1.70) and RI (HR 1.42, 95% CI 1.07-1.89), though not for NRM (HR 1.31, 95% CI 0.87-1.98). In a univariate analysis of NHL patients, LDH levels above the median (>228 IU/L) were associated with decreased OS (51.9% vs. 39.2%, p<0.0001) (Fig. 1C) and increased RI (17.1% vs. 29.2%, p=0.02), but not with NRM. Using a cubic spline (Fig. 1D), we showed that the association between LDH and mortality was also linear (p=0.007). LDH did not reach statistical significance for any of the studied outcomes in ALL, MDS, or PCD.
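As a reading aid for the multivariable AML results, the sketch below back-calculates an approximate Wald statistic from each reported hazard ratio and its 95% confidence interval. It assumes the intervals are symmetric on the log scale, which is the usual construction for Cox models but is not stated in the abstract; the helper name `wald_from_ci` is hypothetical, and because the inputs are rounded the outputs are rough approximations rather than the study's own p-values.

```python
import math

def wald_from_ci(hr, lower, upper, z_crit=1.959964):
    """Approximate SE(log HR), Wald z, and two-sided p from an HR and 95% CI.

    Assumption: the interval is exp(log HR +/- z_crit * SE).
    """
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(hr) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return se, z, p

# HRs and 95% CIs as reported for the AML cohort.
reported = {
    "OS": (1.34, 1.06, 1.70),
    "RI": (1.42, 1.07, 1.89),
    "NRM": (1.31, 0.87, 1.98),
}

results = {name: wald_from_ci(*vals) for name, vals in reported.items()}
for name, (se, z, p) in results.items():
    print(f"{name}: SE(log HR)={se:.3f}, z={z:.2f}, approx. p={p:.3f}")
```

Under these assumptions the OS and RI intervals exclude 1 and give approximate p-values below 0.05, while the NRM interval spans 1 and does not, which matches the pattern described in the text.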

Conclusion:

Using a systematic approach, we show that pre-transplantation LDH is a potent biomarker that could be used for risk stratification of AML and NHL patients who are candidates for allogeneic HSCT. While further validation is warranted, it may be worthwhile to incorporate LDH into future prognostic models that assess the risk of transplantation.

No relevant conflicts of interest to declare.
