Abstract

Background: Detection of measurable residual disease (MRD) at the time of allogeneic hematopoietic cell transplantation (HCT) has been associated with an increased risk of relapse and death in patients with acute myeloid leukemia (AML). However, the methods used to detect MRD and to analyze the resulting data vary considerably. We performed a systematic review and meta-analysis of studies evaluating the prognostic role of MRD, detected by polymerase chain reaction (PCR) or multiparametric flow cytometry (MFC) at the time of HCT, in patients with AML (excluding acute promyelocytic leukemia).

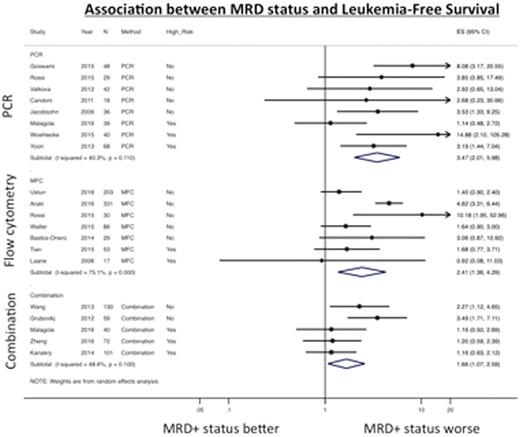

Methods: We searched PubMed and EMBASE for articles published between January 2005 and June 2016 that included at least 15 patients. In addition to patient and disease characteristics, we extracted hazard ratios (HRs) for leukemia-free survival (LFS; primary outcome), overall survival (OS), cumulative incidence of relapse (CIR), and non-relapse mortality (NRM). Where HRs were not reported, we estimated them from Kaplan-Meier curves (using Engauge Digitizer, version 4.1) or, alternatively, from survival point estimates assuming an exponential decay model. We used STATA version 14 to calculate pooled HRs and 95% confidence intervals (CIs) by log-transforming individual study results and performing a frequentist random-effects analysis. Between-study heterogeneity was quantified with the I² statistic. Risk of bias was assessed using a modified Quality in Prognostic Studies instrument.
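The two estimation steps described in the Methods can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' STATA analysis: it assumes the DerSimonian-Laird estimator for the between-study variance (the abstract says only "frequentist random effects"), a normal-approximation 95% CI, and survival point estimates read at the same time point in both groups. The function names `hr_from_survival` and `random_effects_pool` are hypothetical.

```python
import math


def hr_from_survival(s_pos: float, s_neg: float) -> float:
    """HR (MRD+ vs MRD-negative) from survival proportions at the same time t.

    Assumes exponential survival S(t) = exp(-lambda * t) in each group, so
    lambda = -ln S(t) / t and t cancels in the ratio of hazards.
    """
    if not (0 < s_pos < 1 and 0 < s_neg < 1):
        raise ValueError("survival proportions must lie strictly between 0 and 1")
    return math.log(s_pos) / math.log(s_neg)


def random_effects_pool(hrs, ses):
    """Random-effects pooling of log HRs (DerSimonian-Laird, an assumed choice).

    hrs: per-study hazard ratios; ses: standard errors of log(HR).
    Returns (pooled HR, lower 95% CI, upper 95% CI, I^2 in percent).
    """
    y = [math.log(h) for h in hrs]               # log-transform each study's HR
    w = [1 / se ** 2 for se in ses]              # inverse-variance (fixed-effect) weights
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    df = len(y) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if df > 0 else 0.0     # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0  # I^2 heterogeneity
    w_re = [1 / (se ** 2 + tau2) for se in ses]          # random-effects weights
    y_re = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se_re = math.sqrt(1 / sum(w_re))
    z = 1.959964  # two-sided 95% standard normal quantile
    return (math.exp(y_re), math.exp(y_re - z * se_re),
            math.exp(y_re + z * se_re), i2)


# Hypothetical inputs for illustration only (not data from the included studies)
print(round(hr_from_survival(0.30, 0.60), 2))
print(random_effects_pool([2.1, 3.4, 1.8], [0.30, 0.25, 0.40]))
```

For example, a hypothetical LFS of 30% in MRD+ patients vs. 60% in MRD-negative patients at the same time point gives an HR of about 2.36 under the exponential model. When all studies agree closely, Cochran's Q falls below its degrees of freedom, so both the between-study variance and I² are truncated at zero and the random-effects result coincides with the fixed-effect one.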

Results: We identified 20 studies with complete data in the published literature. In addition, supplemental data obtained through personal communication with the author of one further published study allowed its inclusion. In all, 1,539 patients were included. MRD was measured by PCR for WT1 (n=7 studies) or WT1 plus other genes (n=1), by MFC looking for leukemia-associated immunophenotypes (n=8) or a "different from normal" phenotype (n=3), or by combination methods (n=5); some studies reported results from more than one method. Eight studies were judged to have a high risk of bias; in 6 of these, this was due to concerns with the MRD detection technique (MRD measured >60 days before transplant, detection methods unlikely to yield a sensitivity of ≤0.1%, or unclear details of the detection method). Across all studies, MRD+ status was associated with worse LFS (HR 2.48 [1.77-3.46], I²=65.6%), OS (HR 2.27 [1.72-2.98], I²=54.5%), and CIR (HR 3.64 [2.34-5.67], I²=58.0%), but not NRM (HR 1.11 [0.78-1.58], I²=0%). These associations were seen regardless of the detection method used, patient age, conditioning intensity, or cytogenetic risk. PCR-based methods were associated with less inter-study heterogeneity and more precise estimates of LFS than MFC-based or combination methods (Figure 1a). After removing studies with a high risk of bias (Figure 1b), studies using PCR-based and combination methods remained less heterogeneous than those using MFC-based methods (LFS: PCR, HR 4.59 [2.64-7.98], I²=0.0%; combination, HR 2.81 [1.70-4.66], I²=0.0%; MFC, HR 2.77 [1.39-5.50], I²=81.5%). A similar trend was evident for OS but not for CIR, for which heterogeneity was considerably lower and most studies showed a higher relapse risk with MRD+ status.
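Reported HRs and 95% CIs, such as the pooled LFS estimate above, can be mapped back onto the log scale on which pooling is done. The sketch below assumes the interval was computed symmetrically on the log scale with a normal approximation; `log_hr_and_se` is a hypothetical helper for illustration, not part of any package or of the authors' analysis.

```python
import math

Z95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def log_hr_and_se(hr: float, lower: float, upper: float) -> tuple[float, float]:
    """Return (log HR, SE of log HR) from a reported HR and its 95% CI.

    Assumes the CI was computed as exp(log HR +/- Z95 * SE), i.e. it is
    symmetric on the log scale (hypothetical helper for illustration).
    """
    if not (0 < lower <= hr <= upper):
        raise ValueError("expected 0 < lower <= HR <= upper")
    log_hr = math.log(hr)
    se = (math.log(upper) - math.log(lower)) / (2 * Z95)
    return log_hr, se


# Pooled LFS estimate from the Results: HR 2.48 (95% CI 1.77-3.46)
log_hr, se = log_hr_and_se(2.48, 1.77, 3.46)
z = log_hr / se                        # Wald statistic for H0: HR = 1 (log HR = 0)
p = math.erfc(abs(z) / math.sqrt(2))   # two-sided normal p-value
print(f"log HR = {log_hr:.3f}, SE = {se:.3f}, z = {z:.2f}, p = {p:.2g}")
```

For HR 2.48 [1.77-3.46], this gives a standard error of about 0.171 for log(HR) and a Wald z of about 5.3, well away from the null of HR = 1.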

Conclusion: MRD+ status before HCT is associated with an increased risk of relapse and shorter LFS and OS, but not with NRM. Survival risk estimates were substantially more heterogeneous in studies using MFC-based detection, perhaps reflecting the greater technical challenges of MFC compared with the more standardized PCR.

Disclosures: Othus: Glycomimetics: Consultancy; Celgene: Consultancy. Hourigan: Sellas Life Sciences: Consultancy.

Author notes

Asterisk with author names denotes non-ASH members.
