Abstract

Background: Allogeneic hematopoietic stem cell transplantation (allo-HSCT) has been shown to increase survival and induce cure of acute leukemia (AL). Unfortunately, transplant-related mortality (TRM) remains high. Risk scores based on a conventional statistical approach have been developed for TRM prediction, and these have been well validated. Nevertheless, their predictive performance is suboptimal, which limits clinical utility. Factors impeding prediction might be attributed to the statistical methodology, the number and quality of features collected, or simply the size of the population analyzed. We set out to explore these factors using a novel computational approach based on machine learning (ML) algorithms.

ML is a subfield of computer science and artificial intelligence that deals with the construction and study of systems that can learn from data, rather than follow only explicitly programmed instructions. Commonly applied in complex data scenarios, such as financial and technological settings, it may be suitable for outcome prediction in the field of HSCT.

Study design: We used a cohort of 28,236 adult allo-HSCT recipients from the ALWP registry of the EBMT, transplanted between 2000 and 2011 for acute myeloid leukemia or acute lymphoblastic leukemia. The data set contained 24 variables (i.e., patient, leukemia, donor, and transplant characteristics). We devised a two-phase data mining study: 1) development of ML-based prediction models for day 100 TRM; 2) in silico analysis (i.e., performed through a computerized simulation) of the developed models. Factors necessary for optimal prediction were explored: type of model, size of data set, number of necessary variables, and performance in specific subpopulations. Model development and analysis were performed with WEKA, a data mining suite. The area under the receiver operating characteristic curve (AUC) is a commonly used evaluation measure for binary classification problems, which involve classifying an instance as either positive or negative. A perfect model scores an AUC of 1, while random guessing scores an AUC of around 0.5. The AUC was used as the measure of predictive performance for the developed models.
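To make the AUC concrete, the following is a minimal sketch in plain Python, not the WEKA implementation used in the study. It relies on the standard rank interpretation of the AUC: the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one, with ties counted as one half. The function name `auc` and the toy labels and scores are illustrative assumptions and are not study data.

```python
def auc(labels, scores):
    """Return the AUC for binary labels (1 = positive, 0 = negative).

    Computed as the fraction of positive/negative pairs in which the
    positive instance has the higher score; tied pairs count as 0.5.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative instance")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy examples (hypothetical values, chosen only to illustrate the scale).
perfect = auc([1, 1, 0, 0], [0.9, 0.8, 0.3, 0.1])        # every positive outranks every negative
uninformative = auc([1, 0, 1, 0], [0.5, 0.5, 0.5, 0.5])  # constant score, all pairs tied
partial = auc([1, 0, 1, 0], [0.9, 0.8, 0.4, 0.1])        # 3 of 4 pairs ordered correctly
```

Here `perfect` evaluates to 1.0, `uninformative` to 0.5, and `partial` to 0.75. The pairwise loop is quadratic in the number of instances, so for a cohort of this size a sort-based rank computation would be the more practical choice.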

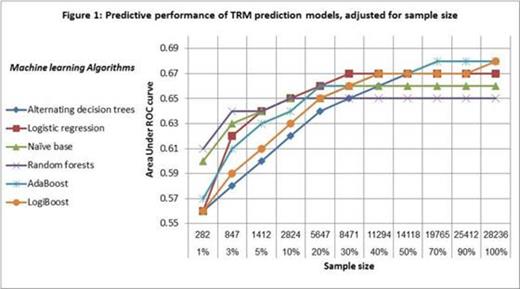

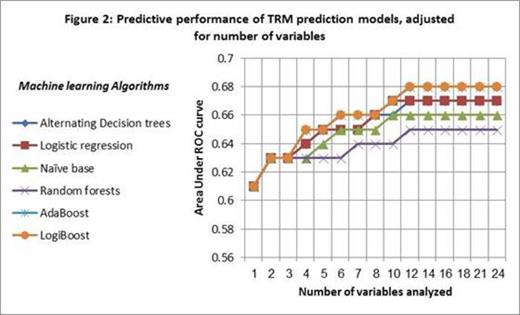

Results: We developed six machine learning based prediction models for TRM at day 100. Optimal AUCs ranged from 0.65 to 0.68. Predictive performance plateaued at a population size of n=5647 to n=8471, depending on the algorithm (Figure 1). A feature selection algorithm ranked variables according to importance. Provided with the ranked variable data, we found that 6 to 12 ranked variables were necessary for optimal prediction, depending on the algorithm (Figure 2). Predictive performance of models developed for specific subpopulations ranged from an average AUC of 0.59 for patients in second complete remission to 0.67 for patients receiving reduced intensity conditioning.

Conclusions: We present a novel computational approach for prediction model development and analysis in the field of HSCT. Using data commonly collected on transplant patients, our simulation elucidates the factors that limit outcome prediction. Regardless of the methodology applied, predictive performance converged when sampling more than 5000 patients. A few variables (approximately 6-12) "carry the weight" with regard to predictive influence. In summary, the presented findings describe a phenomenon of predictive saturation with traditionally collected data. Improving the current performance will likely require additional types of input, such as genetic, biologic, and procedural factors.

No relevant conflicts of interest to declare.

