In this issue of Blood, Pfeilstöcker et al reveal that our current major scoring systems for patients with myelodysplastic syndromes (MDSs) predict outcome better at diagnosis than at later times in the disease, a point that should be taken into consideration in decision making at follow-up.1

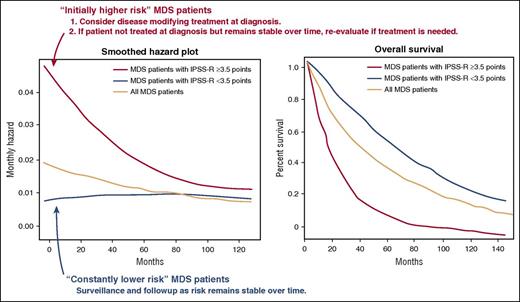

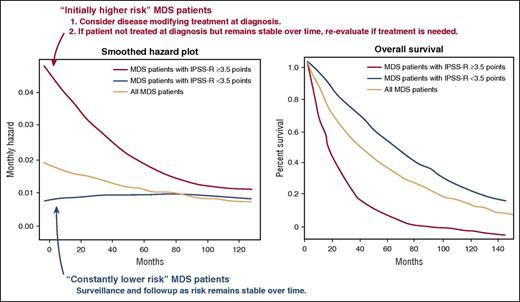

Changes in risk over time in patients with MDSs. Shown are smoothed hazard plots (left) and the Kaplan-Meier survival curve (right). Patients with an IPSS-R score of <3.5 at the time of MDS diagnosis are likely to have a continuously low risk of disease and may be thought of as having “constantly lower risk.” In contrast, patients with an IPSS-R score of ≥3.5 have an initial high risk, but their risk of death due to leukemic transformation or progression of MDS declines over time. Thus, these patients may be considered as “initially higher risk.” Based on current recommendations, disease-modifying therapy should be offered to all patients with “initially higher-risk” disease at diagnosis if possible. If treatment is not possible at diagnosis, however, the patient’s clinical condition should be reassessed before treatment is offered, because prognosis determined at the time of diagnosis may not be accurate at the time of follow-up. The figure has been adapted from Figure 5 in the article by Pfeilstöcker et al that begins on page 902.

The natural course of MDS differs among subgroups, and stratification of MDS patients is therefore essential for decision making at diagnosis and at each follow-up. Although multiple MDS prognostic scoring systems have been developed, each of which stratifies MDS patients very well at diagnosis,2-5 it had not been clarified whether these scoring systems can be applied at later time points. This multicenter retrospective study of 7212 untreated MDS patients clearly shows that the risk of mortality and leukemic transformation decreases over time from diagnosis in higher-risk but not in lower-risk patients. Based on this observation, the authors classified patients with MDS into “initially high-risk” patients and “constant lower-risk” patients, a distinction most reliably made by using a Revised International Prognostic Scoring System (IPSS-R) score of 3.5 as a cutoff (see figure).

Because patients in each MDS subgroup follow different disease courses, a prognostic scoring system built on proportional hazards models may be of limited use for risk evaluation at later time points after diagnosis, because these models assume that the effects of covariates are not time dependent. The current study accurately identifies this problem, demonstrating that the effects of covariates can vary over time, at least in “initially high-risk” patients. One of the major prognostic scoring systems for MDSs that takes into account both prognostic factors at clinical onset and their changes over time is the World Health Organization classification-based prognostic scoring system (WPSS).5 Malcovati et al retrospectively analyzed untreated MDS patient data to establish a dynamic prognostic scoring system that provides an accurate prediction of overall survival and risk of transformation in MDS patients at any time during the course of their disease. Valuable repeated-measures data, including bone marrow evaluations, from 464 MDS patients during follow-up enabled testing of the prognostic value of the WPSS by applying Cox regression with time-dependent covariates. As a result, the time-dependent WPSS was shown to predict both overall survival and risk of transformation at later time points after diagnosis. However, Pfeilstöcker et al demonstrate that even in patients classified as high risk or very high risk according to the WPSS criteria, the hazards of mortality and leukemic transformation diminish over time. This discrepancy may be attributable to the size of the cohorts, the retrospective nature of the studies (most of the repeated bone marrow and cytogenetic evaluations in the WPSS cohort may have been performed when patients showed some hints of disease progression), and the natural behavior of MDS itself. Because MDS patients in the high-risk or very high-risk categories have a high probability of transformation or death within 1 to 2 years after diagnosis, the number of patients remaining at risk decreases after initial diagnosis, which may explain why a proportional hazards model is not necessarily suitable for prognostication in MDS.
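
For readers less familiar with the statistical point, the proportional hazards assumption discussed above can be written out explicitly. The notation below is a generic textbook sketch and is not taken from Pfeilstöcker et al or Malcovati et al: $h_0(t)$ is an assumed baseline hazard, $x$ a vector of prognostic covariates fixed at diagnosis, and $\beta$ their coefficients.

$$
\begin{aligned}
&\text{Proportional hazards:} && h(t \mid x) = h_0(t)\,\exp\!\left(\beta^{\top} x\right),
&& \frac{h(t \mid x_1)}{h(t \mid x_2)} = \exp\!\left(\beta^{\top}(x_1 - x_2)\right) \ \text{(constant in } t\text{)} \\
&\text{Time-dependent covariates:} && h(t \mid x(t)) = h_0(t)\,\exp\!\left(\beta^{\top} x(t)\right) \\
&\text{Time-varying effects:} && h(t \mid x) = h_0(t)\,\exp\!\left(\beta(t)^{\top} x\right),
&& \frac{h(t \mid x_1)}{h(t \mid x_2)} = \exp\!\left(\beta(t)^{\top}(x_1 - x_2)\right)
\end{aligned}
$$

In the first form, the hazard ratio between any 2 patients does not change with time since diagnosis. The second form, which corresponds in spirit to Cox regression with time-dependent covariates, updates the covariates themselves at follow-up but keeps $\beta$ fixed. The third form lets the effect of a covariate measured at diagnosis weaken or strengthen over time, so a diminishing excess hazard in patients scored as higher risk at diagnosis would appear as $\beta(t)$ shrinking toward 0.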

Based on this study, we should be careful when we apply most of the current prognostic scoring systems to MDS patients in clinical follow-up, especially to higher-risk patients. This study reveals that there are potentially 2 prognostic subgroups in patients who are classified as “initially high risk” at diagnosis: (1) “truly high-risk” patients who will develop acute myelogenous leukemia (AML) in 1 to 2 years and (2) “relatively high-risk” patients who are not likely to develop AML. Although aggressive therapeutic interventions are generally considered for “initially high-risk” patients at diagnosis, this approach potentially overtreats the “relatively high-risk” group.

Following this study, efforts to establish or optimize prognostic scoring systems for patients both at diagnosis and at the time of follow-up are needed to predict prognosis more accurately. More specifically, there is a need to identify those patients in clinical follow-up who are at risk among the “constant lower-risk” group and those who are not at risk in the “initially high-risk” group. This may require a new study that includes treated patients, given the importance of therapy in modifying risk. Moreover, future efforts to correlate mutational profiling at diagnosis and at follow-up would clarify the genetic characteristics of “initially high-risk” patients and “constant lower-risk” patients in more detail. This may also help us understand which molecular characteristics confer the highest and lowest risk of death and transformation in MDS.

Conflict-of-interest disclosure: The authors declare no competing financial interests.