Key Points

American Society of Hematology has endorsed using risk assessment models (RAMs) to individualize VTE prophylaxis.

Fast-and-frugal decision tree based on IMPROVE RAMs reduces unnecessary VTE prophylaxis by 45% and saves ∼$5 million annually.

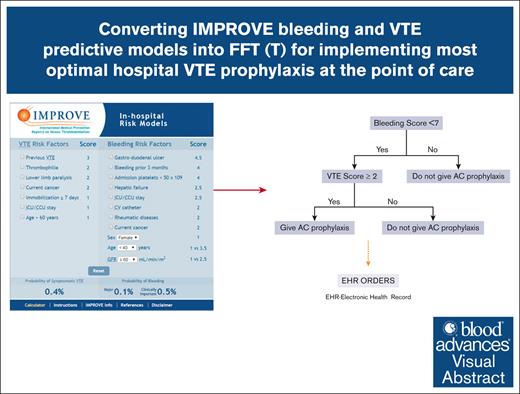

Visual Abstract

Current hospital venous thromboembolism (VTE) prophylaxis for medical patients is characterized by both underuse and overuse. The American Society of Hematology (ASH) has endorsed the use of risk assessment models (RAMs) as an approach to individualize VTE prophylaxis by balancing overuse (excessive risk of bleeding) and underuse (risk of avoidable VTE). ASH has endorsed IMPROVE (International Medical Prevention Registry on Venous Thromboembolism) risk assessment models, the only RAMs to assess short-term bleeding and VTE risk in acutely ill medical inpatients. ASH, however, notes that no RAMs have been thoroughly analyzed for their effect on patient outcomes. We aimed to validate the IMPROVE models and adapt them into a simple, fast-and-frugal (FFT) decision tree to evaluate the impact of VTE prevention on health outcomes and costs. We used 3 methods: the “best evidence” from ASH guidelines, a “learning health system paradigm” combining guideline and real-world data from the Medical University of South Carolina (MUSC), and a “real-world data” approach based solely on MUSC data retrospectively extracted from electronic records. We found that the most effective VTE prevention strategy used the FFT decision tree based on an IMPROVE VTE score of ≥2 or ≥4 and a bleeding score of <7. This method could prevent 45% of unnecessary treatments, saving ∼$5 million annually for patients such as the MUSC cohort. We recommend integrating IMPROVE models into hospital electronic medical records as a point-of-care tool, thereby enhancing VTE prevention in hospitalized medical patients.

Introduction

Fifteen years ago, the US Surgeon General issued a call to action to prevent deep venous thrombosis and pulmonary embolism (VTE) as one of the most serious but avoidable hospital complications.1 Since then, the focus has remained on reducing the underuse of VTE prophylaxis in hospitalized patients, resulting in “mandatory use of a standardized medical order set in which a prescription for prophylaxis is embedded.”2 Reducing the underuse of VTE prophylaxis has also been promoted as one of the critical quality measures by most national quality organizations in the United States.3 Increasingly, however, overuse is being recognized as a more significant problem. Studies in the last few years have demonstrated that 78% to 86% of medical hospitalized patients at low risk for VTE have received unnecessary pharmacologic VTE prophylaxis.4 As a result, there is increasing recognition that some patients at higher risk of VTE need hospital VTE prophylaxis, whereas others, considered to be at low risk of VTE, do not.5 Most recently, influential guideline panels, such as the American Society of Hematology (ASH), have endorsed the use of risk assessment models (RAMs) as an approach to individualizing VTE prophylaxis as a way of finding a balance between overuse (excessive risk of bleeding) and underuse (risk of avoidable VTE).6,7

ASH has also endorsed IMPROVE (International Medical Prevention Registry on Venous Thromboembolism) VTE RAM to guide VTE prophylaxis.8 IMPROVE is the only existing RAM for assessing both short-term bleeding (IMPROVE_bleeding)9 and VTE risk in acutely ill medical inpatients (IMPROVE_vte).8 It has been externally validated in US,10-12 Canadian,13 Chinese,14 and European populations15 with acceptable discrimination (area under the curve [AUC] = 0.65 to 0.77 [VTE score]; AUC = 0.63 to 0.76 [bleeding score]) and calibration properties.

Although RAMs can (accurately) assess the risk of bleeding and VTE, to date, their use has not been proven to aid decision-making and improve health outcomes. Additionally, no recommendations exist for integrating these 2 predictive models (ie, IMPROVE_bleeding and IMPROVE_vte) into 1 coherent management strategy. We previously demonstrated that this can only be accomplished within a decision-theoretical framework.16-18 One such framework consists of fast-and-frugal decision trees (FFTs), effective implementation of heuristics for problem-solving, and decision-making strategies composed of sequentially ordered cues (tests) and binary (yes/no) decisions formulated via a series of if-then statements reflective of the heuristic-analytic, adaptive theory of human reasoning that dominates clinical decision-making.17-21 Unlike the popular clinical pathways (algorithms), which are theory-free constructs, FFTs are based on solid theoretical foundations.20,21 The latter provides a basis for quantitative evaluation of the classification capacity of FFTs. Importantly, clinical algorithms can be easily converted into FFTs to facilitate quantitative assessment of their classification accuracy.17-21

In this article, we describe the conversion of IMPROVE bleeding and VTE predictive models to FFT to identify the optimal recommendation for VTE prophylaxis in hospitalized patients.

Methods

Practice guidelines for using IMPROVE RAMs

The original IMPROVE VTE risk article8 and ASH6,7 guidelines recommend VTE prophylaxis if the IMPROVE VTE risk score is ≥2. This converts into the probability of VTE between 1% and 1.5% in the original report.8 Some authors recommend an IMPROVE score of ≥4 to indicate thromboprophylaxis in hospitalized patients.22 The IMPROVE bleeding risk report9 and ASH6,7 guidelines recommend IMPROVE bleeding score of <7 and ≥7 as a low or high bleeding risk to proceed with or avoid VTE prophylaxis, respectively. According to the original publication,9 IMPROVE bleeding score <7 corresponds to 1.5% of major and clinically relevant bleeding. IMPROVE bleeding score ≥7 was observed in 7.9% of the patients who experienced major and clinically relevant bleeding.

Conversion of IMPROVE RAMs into FFT

The cutoffs identified for VTE and bleeding scores lend themselves naturally to integration into the FFT decision tree as a decision-support strategy. Figure 1 shows the FFT that integrates the IMPROVE VTE and bleeding scores into comprehensive, risk-adapted VTE prophylaxis. As shown, we started with the “first, do no harm” decision principle: patients with a high bleeding score (≥7) should not be given prophylaxis (first cue in Figure 1). Those with bleeding scores <7 are then assessed for the risk of VTE. If their IMPROVE VTE risk score is ≥2 or ≥4 (see “Results”), they are given pharmacological VTE prophylaxis.
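The two-cue tree described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the `Patient` class, the `recommend_prophylaxis` function, and the `vte_cutoff` parameter are hypothetical names introduced here, and the scores are assumed to have been computed already.

```python
from dataclasses import dataclass

# Cutoff taken from the text: a bleeding score >= 7 is treated as high bleeding risk.
BLEEDING_CUTOFF = 7


@dataclass(frozen=True)
class Patient:
    """Hypothetical container for precomputed IMPROVE scores."""
    bleeding_score: float  # IMPROVE bleeding score
    vte_score: int         # IMPROVE VTE score


def recommend_prophylaxis(patient: Patient, vte_cutoff: int = 2) -> bool:
    """Return True if the tree exits with 'give pharmacological prophylaxis'."""
    # Cue 1 ("first, do no harm"): high bleeding risk exits with no prophylaxis.
    if patient.bleeding_score >= BLEEDING_CUTOFF:
        return False
    # Cue 2: among patients at low bleeding risk, treat if the VTE score
    # reaches the chosen cutoff (2 or 4 in the text).
    return patient.vte_score >= vte_cutoff
```

Calling `recommend_prophylaxis(Patient(bleeding_score=3.0, vte_score=2))` exits at the second cue with a recommendation to treat, whereas any patient with a bleeding score of 7 or more exits at the first cue regardless of the VTE score.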

By categorizing the disease or making predictions, FFT also functions as a predictive model.21 This enables personalized forecasting of outcomes and tailored management based on a sequence of responses within the FFT decision tree, while considering the heterogeneity of patients' risk profiles.21,23,24

There are 2 variants of FFT: (a) standard versions of FFT, which aim to classify a condition of interest (ie, whether the patient has VTE or not) but do not take the consequences of treatment into account,17,19,21 (b) a variant of FFT referred to as FFT with threshold (FFTT),17,19,21 which incorporates benefits and harms of treatment at each exit of the tree to indicate whether treatment such as VTE prophylaxis is indicated depending on the risk for VTE or bleeding concerning the treatment threshold at a given cue.16,25,26 Below, we present the impact and classification accuracy of FFT/T-based management strategies (ie, overall accuracy, sensitivity, specificity, and positive and negative predictive values).
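The classification measures listed above follow directly from a 2 × 2 table of tree exits against observed outcomes. The sketch below assumes the counts of true and false positives and negatives are already available; the function name and the example counts are hypothetical and are not taken from the study data.

```python
import math


def _ratio(numerator: int, denominator: int) -> float:
    """Return numerator/denominator, or NaN when the denominator is zero."""
    return numerator / denominator if denominator else math.nan


def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Summarize a 2 x 2 classification table."""
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": _ratio(tp, tp + fn),  # outcome detected when present
        "specificity": _ratio(tn, tn + fp),  # outcome ruled out when absent
        "ppv": _ratio(tp, tp + fp),          # P(outcome | tree says "positive")
        "npv": _ratio(tn, tn + fn),          # P(no outcome | tree says "negative")
    }


# Hypothetical counts chosen only to illustrate a low-prevalence outcome.
example = classification_metrics(tp=2, fp=98, tn=890, fn=10)
```

With a rare outcome, as in this made-up example, the negative predictive value is close to 1 while the positive predictive value stays low, which is the pattern expected for VTE in a general medical cohort.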

Using real-world data to collect data on IMPROVE RAMs

Patients were eligible for the analysis if they were aged ≥18 years, were consecutively admitted to the Medical University of South Carolina (MUSC) hospital in Charleston, SC, and were hospitalized for ≥3 days for an acute medical illness. Patients were excluded if they received anticoagulants or thrombolytics at admission or within 48 hours after admission, had bleeding at admission, had major surgery or trauma within 3 months before admission, or were admitted for treatment of VTE. Admissions for major surgery and patients with primary obstetric or mental health diagnoses were also excluded.

We extracted data on the key features of IMPROVE VTE and bleeding RAMs from MUSC electronic medical (EPIC)/discharge records. Supplemental Appendix 0 shows the ICD10 (International Classification of Diseases, 10th Revision) and other codes used to select the variables of interest for the analysis. The outcomes of interest were VTE at 90 days and bleeding (major and clinically significant bleeding) at 14 days. Two reviewers manually verified all the outcomes (VTE and bleeding). The ascertainment of exposure (whether the patients received VTE prophylaxis) was verified using 20% of randomly selected records. The Gwet agreement coefficient27 between the 2 observers was high; the accuracy of outcome classification was 0.95 (95% CI, 0.94-0.96) for VTE and 0.93 (95% CI, 0.92-0.94) for bleeding. The overall accuracy of ascertainment of the exposure was 96%.
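For readers unfamiliar with Gwet's coefficient, the sketch below computes the first-order agreement coefficient (AC1) for 2 raters and a binary outcome. It assumes that AC1 is the variant meant in the text, which the article does not state, and the function name and counts are hypothetical rather than taken from the study.

```python
def gwet_ac1_binary(both_yes: int, both_no: int,
                    only_a_yes: int, only_b_yes: int) -> float:
    """Gwet's AC1 for 2 raters classifying each record as yes/no."""
    n = both_yes + both_no + only_a_yes + only_b_yes
    if n == 0:
        raise ValueError("at least one rated record is required")
    # Observed agreement: proportion of records on which the raters agree.
    observed = (both_yes + both_no) / n
    # Average proportion of "yes" ratings across the two raters.
    pi_yes = ((both_yes + only_a_yes) / n + (both_yes + only_b_yes) / n) / 2
    # Chance agreement under Gwet's model for 2 categories.
    chance = 2 * pi_yes * (1 - pi_yes)
    return (observed - chance) / (1 - chance)


# Hypothetical counts: 5 agreed events, 90 agreed non-events, 5 disagreements.
ac1 = gwet_ac1_binary(both_yes=5, both_no=90, only_a_yes=3, only_b_yes=2)
```

Unlike Cohen's kappa, the chance-agreement term here cannot exceed 0.5, so the coefficient remains stable when the outcome is rare, as VTE and bleeding are in this setting.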

Enoxaparin at the recommended prophylactic dose (40 mg daily or 30 mg twice a day, further adjusted for renal function as needed)28 was almost exclusively used in our patient cohort. Patients who received therapeutic/intermediate-intensity anticoagulation28 (supplemental Appendix 2, Table 1C) were excluded (n = 601). Patients who received aspirin (n = 132) were included in the prophylactic arm in light of the evidence that aspirin has prophylactic effects in a surgical setting.29 Patients who received antiplatelet combinations without aspirin, heparin flushes to keep the IV lines open, and thrombolytics (alteplase) for the treatment of thrombotically occluded catheters (n = 42) were included in the control arm (supplemental Appendix 2, Table 1B).

External validation of IMPROVE models

To assess the generalizability of the original IMPROVE models,8,9 we assessed their performance using the MUSC data. Both the original and external validations of IMPROVE models10-15 were performed agnostic of prophylactic treatments. The model performance was assessed by calculating the discrimination and calibration properties.30 Discrimination (capacity of the predictive model to discriminate between patients who truly have outcomes vs those who do not have an outcome of interest) was assessed using c-statistics. Calibration (capacity to determine the agreement between predicted and actually observed outcomes) was assessed using the Hosmer-Lemeshow goodness-of-fit statistic (H-L) and examining intercept, that is, whether the best-fit line of the model crossed the y-axis at zero predicted probability and whether the slope was statistically significantly different from 1. In the case of poor external validation of the model, we assessed the internal validity of IMPROVE models using MUSC data.
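As an illustration of discrimination, the c-statistic can be computed as the proportion of event/non-event pairs in which the patient with the event received the higher predicted risk, with ties counted as one half. The sketch below is a plain-Python version under that definition; it is not the Stata routine used in the study, and the function name is hypothetical.

```python
from typing import Sequence


def c_statistic(predicted_risk: Sequence[float], outcome: Sequence[int]) -> float:
    """Concordance (c) statistic for a binary outcome coded 1 = event, 0 = no event."""
    events = [p for p, y in zip(predicted_risk, outcome) if y == 1]
    non_events = [p for p, y in zip(predicted_risk, outcome) if y == 0]
    if not events or not non_events:
        raise ValueError("both events and non-events are required")
    concordant = 0.0
    for risk_event in events:
        for risk_non_event in non_events:
            if risk_event > risk_non_event:
                concordant += 1.0   # pair ranked correctly
            elif risk_event == risk_non_event:
                concordant += 0.5   # tied pair counts as half
    return concordant / (len(events) * len(non_events))
```

A value of 0.5 corresponds to no discrimination and 1.0 to perfect separation of patients with and without the outcome. The pairwise loop is quadratic in the number of patients, which is acceptable for a cohort of a few thousand.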

Impact analysis: comparison of various VTE prophylaxis strategies on health outcomes

To estimate the predictive impact of VTE prophylaxis using IMPROVE models, we first used the best evidence approach, relying on the effects of thromboprophylaxis as reported in the ASH guidelines.6,7 Specifically, we assumed that thromboprophylaxis with medications such as enoxaparin reduces the risk of VTE by 41% (RRR = 1 − RR = 1 − 0.59 = 41%), where RR refers to the relative risk and RRR refers to the relative risk reduction of VTE due to thromboprophylaxis compared with no treatment. The ASH estimated relative risk increase (RRI) of clinically significant bleeding to be 48% higher on thromboprophylaxis than in no treatment arms.6
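The arithmetic behind these assumptions can be made explicit. The sketch below applies the relative effects quoted above to a patient's baseline risks; the function name and the baseline risks in the example are hypothetical and serve only to show the calculation.

```python
RR_VTE = 0.59           # relative risk of VTE on prophylaxis, as quoted in the text
RRR_VTE = 1 - RR_VTE    # relative risk reduction, 0.41
RRI_BLEEDING = 0.48     # relative risk increase of clinically significant bleeding


def risks_on_prophylaxis(baseline_vte: float, baseline_bleeding: float) -> tuple:
    """Return (VTE risk, bleeding risk) if prophylaxis is given."""
    vte = baseline_vte * (1 - RRR_VTE)                  # risk lowered by the RRR
    bleeding = baseline_bleeding * (1 + RRI_BLEEDING)   # risk raised by the RRI
    return vte, bleeding


# Hypothetical baseline risks: 2% VTE and 1% bleeding without prophylaxis.
vte_treated, bleeding_treated = risks_on_prophylaxis(0.02, 0.01)
```

In this made-up example, prophylaxis lowers the VTE risk from 2% to about 1.2% and raises the bleeding risk from 1% to about 1.5%, which is the trade-off the management strategies are compared on.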

We compared the effects of the following management strategies on the number of bleeding events (major and clinically significant) and VTE events (supplemental Appendix 1): (1) administer no VTE prophylaxis to medically hospitalized patients; (2) administer VTE prophylaxis to all hospitalized patients admitted for treatment of medical disease (current default management strategy in most institutions); (3) administer VTE prophylaxis if VTE risk exceeds 1% (as per the original IMPROVE recommendations29); (4) administer VTE prophylaxis if VTE score is ≥2 (as per ASH guidelines6 and the original IMPROVE article8); (5) administer VTE prophylaxis if VTE score is ≥4 (as per reference 22); (6) administer VTE prophylaxis if the bleeding score is <7 (as per IMPROVE bleeding score recommendation9); (7-10) administer VTE prophylaxis as per FFT/T (Figure 1) by assuming a VTE score cutoff of ≥2 or ≥4, respectively.
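One way to picture this comparison is to express each strategy as a rule that decides who is treated and then sum the expected events over a cohort. The sketch below does this for a 3-patient cohort; the patients, their baseline risks, and all names are hypothetical, and how VTE and bleeding events are weighted against each other is deliberately left out because it is not specified in this section.

```python
RRR_VTE = 0.41       # relative risk reduction of VTE, as quoted in the text
RRI_BLEEDING = 0.48  # relative risk increase of bleeding, as quoted in the text

# Each strategy maps a patient record to True (give prophylaxis) or False.
STRATEGIES = {
    "treat none": lambda p: False,
    "treat all": lambda p: True,
    "VTE risk > 1%": lambda p: p["p_vte"] > 0.01,
    "VTE score >= 2": lambda p: p["vte_score"] >= 2,
    "VTE score >= 4": lambda p: p["vte_score"] >= 4,
    "bleeding score < 7": lambda p: p["bleeding_score"] < 7,
    "FFT, VTE score >= 2": lambda p: p["bleeding_score"] < 7 and p["vte_score"] >= 2,
    "FFT, VTE score >= 4": lambda p: p["bleeding_score"] < 7 and p["vte_score"] >= 4,
}

# Hypothetical cohort; baseline risks are illustrative, not study estimates.
cohort = [
    {"bleeding_score": 2.0, "vte_score": 1, "p_vte": 0.005, "p_bleed": 0.010},
    {"bleeding_score": 3.5, "vte_score": 3, "p_vte": 0.020, "p_bleed": 0.015},
    {"bleeding_score": 8.0, "vte_score": 4, "p_vte": 0.050, "p_bleed": 0.080},
]


def expected_events(patients, treat):
    """Return expected (VTE events, bleeding events) under a treatment rule."""
    vte = bleeding = 0.0
    for p in patients:
        if treat(p):
            vte += p["p_vte"] * (1 - RRR_VTE)
            bleeding += p["p_bleed"] * (1 + RRI_BLEEDING)
        else:
            vte += p["p_vte"]
            bleeding += p["p_bleed"]
    return vte, bleeding


results = {name: expected_events(cohort, rule) for name, rule in STRATEGIES.items()}
```

Keeping the strategies in a dictionary of rules makes it straightforward to add or remove a strategy without touching the event calculation.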

According to equation 1, if the probability (risk) of VTE exceeds the treatment threshold, we should give treatment; otherwise, we should not provide VTE prophylaxis.25,26,36,37 Note that according to classic statistical decision theory, when choosing between different options, the most rational choice is the one with the highest expected utility, regardless of statistical significance; the magnitude of differences is irrelevant.38

Because patients studied in clinical trials differ from “real-world” patients,39,40 a fundamental concept behind the “learning health system paradigm”41 is to evaluate the impact of the best available research evidence in a given population of interest. Therefore, we further extended the modeling of the impact of VTE prophylaxis on health outcomes by using distributions of prognostic scores from our MUSC population while assuming the applicability of the best available evidence as per ASH guidelines.6,7

Finally, we performed the same analysis using exclusively MUSC “real-world” data. From the data, we estimated RRRvte = 0.058 and RRIbleeding = 0.197; however, the results for RRR were not significant, potentially indicating a null effect of prophylaxis on VTE (see “Discussion”). All statistical analyses were performed using the statistical package Stata42 and reported according to Strengthening the Reporting of Observational Studies in Epidemiology (STROBE)43 and TRIPOD44 (Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis) guidelines.

Results

Figure 2 shows a STROBE flow diagram displaying the study patients’ enrollment, exclusion criteria, and data availability for the analysis according to anticoagulation treatment. From 1 January 2022 to 31 December 2022, 5051 consecutive patients were admitted to the MUSC hospital in Charleston, SC, of whom 2072 were medical patients eligible for the analysis per the criteria in the original IMPROVE reports.8,9 After further restriction to the observations within 14 days (bleeding) and 90 days (VTE) and exclusion of patients who received therapeutic/intermediate-intensity anticoagulation,28 1429 analyzable patients remained for the impact analysis. The median length of stay was 5 days (range, 3-85 days). Note that we could not collect data on the “immobility >7 days” predictor because of a lack of adequate ICD10 codes.

STROBE flow diagram displaying the study patients’ enrollment, exclusion criteria, and data availability for the analysis according to anticoagulation treatment.

Tables 1 and 2 show the distribution of IMPROVE VTE and bleeding risk predictors in our population as per the intention-to-treat population. Not surprisingly, the case mix differed between our cohort and the original IMPROVE publications,8,9 primarily because more patients required admission to the intensive care unit during the index hospitalization in our cohort than in the original IMPROVE cohorts (30.2% vs 4.89%8 and 8.5%9, respectively). However, the distribution of risk scores was similar despite differences in the distribution of predictors (Table 3).

VTE baseline characteristics and outcomes

| Predictors (N = 2072) | % |
|---|---|
| Age >60 | 56.2 |
| Current cancer | 16.8 |
| Previous VTE | 0.7 |
| ICU (yes) | 30.2 |
| Lower limb paralysis | 0.8 |
| Thrombophilia | 1.0 |
| Immobility >7 days | NA |
| VTE_Outcome (yes) | 2.2 |

NA, not available.

Bleeding baseline characteristics and outcomes

| Predictors (N = 2072) | % |
|---|---|
| GFR 30-59 | 14.4 |
| Male | 49.6 |
| Age 40-84 | 77.6 |
| Current cancer | 16.8 |
| Rheumatic diseases | 7.4 |
| Central venous catheter | 34.5 |
| ICU (yes) | 30.2 |
| Severe renal failure GFR <30 | 11.7 |
| Hepatic failure | 2.4 |
| Age >85 | 8.3 |
| Platelet count <50 × 10⁹ cells/L | 0.9 |
| Bleeding before admission | 2.8 |
| Active gastro ulcer | 0.0 |
| Bleed_outcome (yes) | 1.6 |

GFR, glomerular filtration rate.

Distribution of IMPROVE risk scores used in ASH guidelines

| Score | ASH/original IMPROVE (%) | MUSC (%) |
|---|---|---|
| VTE score | | |
| 0 or 1 | 69 | 66 |
| 2 or 3 | 24 | 30 |
| ≥4 | 7 | 4 |
| Bleeding score | | |
| <7 | 90 | 79 |
| ≥7 | 10 | 21 |

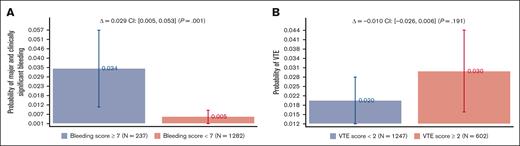

Figure 3 shows the bleeding and VTE rates as a function of the recommended discriminatory scores: <7 vs ≥7 for bleeding and <2 vs ≥2 VTE scores. Bleeding scores showed a statistically significant absolute risk difference of 2.9% (95% CI, 0.5%-5.3%; P = .001), whereas no statistical difference was noted for VTE scores (see “Discussion”). Supplemental Appendix 3 shows the analysis by VTE prophylaxis, which is consistent with what is observed in the original publications8,9 and other validation studies;10-15 treatment did not affect the IMPROVE prognostic scores.

Major and clinically significant bleeding and VTE rates as a function of the recommended IMPROVE models’ discriminatory scores: <7 vs ≥7 for bleeding and <2 vs ≥2 VTE scores (see Figure 1).

Supplemental Appendix 4, Figure 1.1 shows the external validation of IMPROVE bleeding scores with discrimination of AUC = 0.799 (95% CI, 0.731-0.848), similar to that reported in the original IMPROVE report9 and other external validation studies.13-15 After updating the intercept,45,46 the model was well calibrated with a not significant H-L test (P = .287), with an intercept close to 0 and slope of 1.016, which was not statistically significantly different from 0 and 1, respectively. However, we could not externally validate the IMPROVE VTE risk model using the original IMPROVE scores. Nevertheless, using our data with the same predictors, we obtained similar discrimination (AUC = 0.704) as was reported in the original IMPROVE score8 and excellent calibration (H-L test: P = .927, with an intercept of 0 and slope = 1) (supplemental Appendix 4; Figure 1.2). Importantly, however, both VTE scores and particularly bleeding scores were almost perfectly discriminatory regarding the predicted risk above and below the scores used in FFT (Figure 4; and supplemental Appendix 3; Figure 1.3).

Relationship between IMPROVE VTE scores (A) and bleeding scores (B) and the probability of VTE and bleeding (major and clinically relevant).

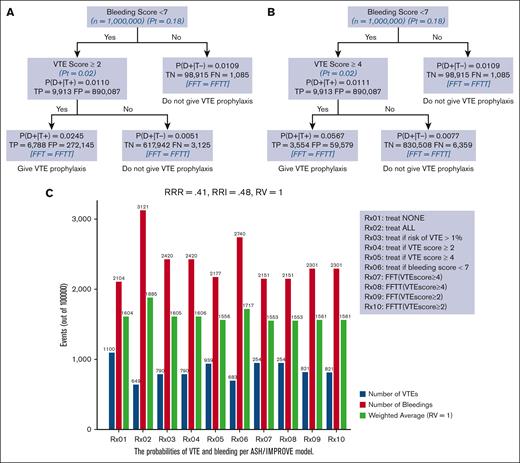

Figure 5A-B shows FFT and FFTT decision trees informed by IMPROVE risk assessment scores ≥2 (5A) and ≥4 (5B) and evidence-based ASH guidelines.6,7 The results were consistent with our hypothesis that the FFT(T)-based decision tree generated by linking VTE and bleeding IMPROVE scores (Figure 1) leads to optimal classification and decision making. We also determined that the tree was fast, reaching the decision in fewer than 2 steps, and frugal, using only ∼5% of all information. In this case, FFT and FFTT had identical classifications, with an overall accuracy of 93% for VTE scores ≥4 (Figure 5B) and 72% for VTE scores ≥2 (Figure 5A). However, given the low prevalence of outcomes (Figure 3), as expected, the negative predictive value was much higher (>99% for both VTE scores) than the positive predictive value, which was 5.6% for FFT(T) using a VTE score ≥4 and 2.44% for a VTE score ≥2. Figure 5C shows an impact analysis comparing 10 different management strategies. The 2 FFT(T)-driven strategies (Rx07-Rx10) were the best, resulting in the lowest weighted average and optimal trade-offs between bleeding and VTE. All FFT(T) strategies outperformed the other currently recommended management strategies. Strategy Rx2 (“treat all,” the presently recommended strategy) was the worst, yielding the highest weighted average of outcomes, driven by an increased number of bleeding events, even though, as expected, it led to the lowest VTE event rates. The opposite was observed for the Rx1 strategy (“treat none”), which was the second-worst management option. The changes in RV did not materially affect the results (supplemental Appendix 5).

FFT and FFTT decision trees informed by IMPROVE risk assessment scores ≥2 (A) and ≥4 (B) and evidence-based ASH guidelines; (C) impact on bleeding and VTE outcomes. The strategy with the lowest weighted average is considered the best. P(D+|T+), probability of VTE if the test is “positive,” that is, the IMPROVE score exceeds the given score (= PPV); P(D+|T−), probability of VTE if the test is “negative,” that is, the IMPROVE score is below the given score; TP, true positives; FP, false positives; TN, true negatives; FN, false negatives.

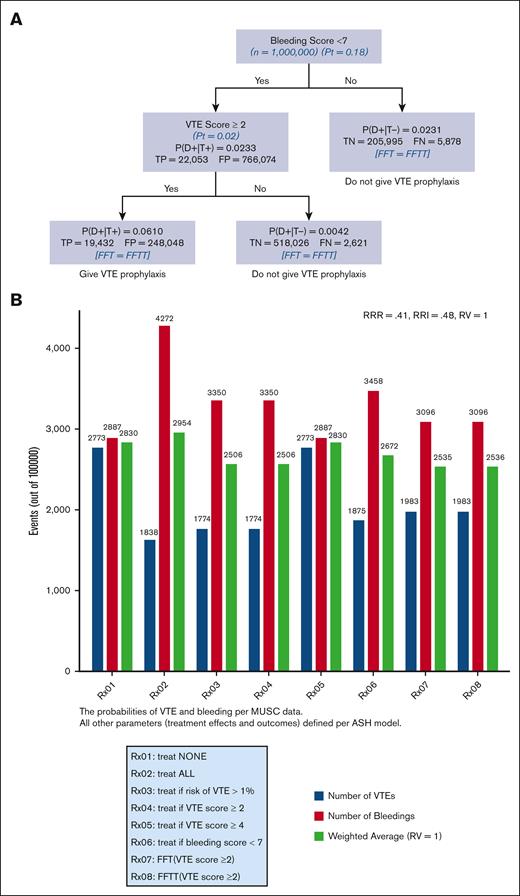

Figure 6 shows the analysis according to the “learning health system”41 paradigm, the application of the best research evidence to local data. In our case, this consisted of using MUSC bleeding and VTE risk scores ≥2 while using ASH guidelines as a source of the most reliable (“best”) evidence.6,7 The results agree with the analysis based on the “best evidence” shown in Figure 5, with a slightly higher overall classification accuracy of 74% and positive predictive value of 6%, while retaining a higher than 99% negative predictive value. Supplemental Appendix 6 shows the results of the sensitivity analysis using the RV.

FFT and FFTT decision tree analysis according to the “learning health system” paradigm, the application of the best research evidence to local data. Here, it consisted of using MUSC bleeding and VTE risk scores ≥2 while using ASH guidelines as a source of the most reliable (“best”) evidence (A). The strategy with the lowest weighted average is considered the best (B). P(D+|T+), probability of VTE if the test is “positive,” that is, the IMPROVE score exceeds the given score (= PPV); P(D+|T−), probability of VTE if the test is “negative,” that is, the IMPROVE score is below the given score.

Supplemental Appendix Figure 7 shows the FFT and FFTT decision tree using real-world MUSC data for VTE, bleeding risks, and treatment data. As shown in Figure 3 and supplemental Appendix 3, in our data set, we detected a significant effect of VTE prophylaxis on bleeding but not VTE outcomes. This resulted in unrealistic estimates of RRR of 5.8% and RRI of 19.7%. The results show that, as expected, when treatment is ineffective but results in more harm, the "no treatment" strategy represents the best option. However, assuming ineffective treatment disagrees with our current understanding of the best evidence (see “Discussion”). Note that if RV = 0.75, FFT-driven VTE prophylaxis after avoiding prophylaxis in patients at a high risk of bleeding represents the best management option, even if assuming such low effectiveness of treatment (supplemental Appendix 7).

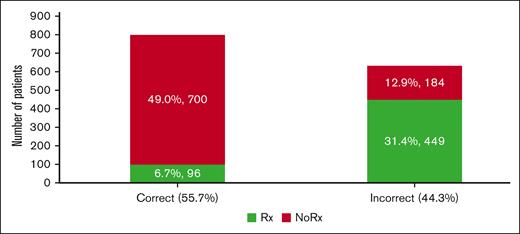

Based on Figure 1, we can define unnecessary VTE prophylaxis (overuse) as VTE prophylaxis given to patients with a bleeding score ≥7 or a VTE score <2, and failure to administer VTE prophylaxis (underuse) as VTE prophylaxis not given to patients with a VTE score ≥2 and a bleeding score <7. By applying this FFT(T) within the “learning health system” paradigm, we can calculate that inappropriate VTE prophylaxis, amounting to ∼44% (13% underuse and 31% overuse), could be avoided (Figure 7), with annual savings from avoided resource waste of ∼$5 million for our patient cohort (supplemental Appendix Figure 8).
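These definitions translate directly into a per-patient classification. The sketch below labels each prophylaxis decision as appropriate, overuse, or underuse and then tabulates the proportions; the function names and the example records are hypothetical and do not reproduce the study data.

```python
from collections import Counter


def classify_prophylaxis(given: bool, bleeding_score: float,
                         vte_score: int, vte_cutoff: int = 2) -> str:
    """Label one decision relative to the FFT recommendation."""
    # Prophylaxis is indicated only for low bleeding risk and high VTE risk.
    indicated = bleeding_score < 7 and vte_score >= vte_cutoff
    if given and not indicated:
        return "overuse"
    if not given and indicated:
        return "underuse"
    return "appropriate"


def use_proportions(records, vte_cutoff: int = 2) -> dict:
    """Proportion of each label among (given, bleeding_score, vte_score) records."""
    counts = Counter(
        classify_prophylaxis(given, bleeding, vte, vte_cutoff)
        for given, bleeding, vte in records
    )
    total = sum(counts.values())
    if total == 0:
        raise ValueError("at least one record is required")
    return {label: counts[label] / total
            for label in ("appropriate", "overuse", "underuse")}


# Hypothetical records: (prophylaxis given, bleeding score, VTE score).
example_records = [
    (True, 8.0, 3),   # given despite high bleeding risk
    (True, 2.0, 1),   # given despite low VTE risk
    (False, 2.0, 2),  # withheld although indicated
    (True, 2.0, 2),   # given and indicated
    (False, 9.0, 0),  # withheld and not indicated
]
proportions = use_proportions(example_records)
```

The sum of the overuse and underuse proportions corresponds to the share of inappropriate prophylaxis decisions in the cohort being examined.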

Estimates of the proportion of correctly and incorrectly administered VTE prophylaxis. Of the 44% of inappropriate VTE prophylaxis, 13% and 31% consisted of underuse and overuse, respectively (see also supplemental Appendix 7, related to estimates of costs of the inappropriate VTE prophylaxis).

Discussion

Current hospital VTE prophylaxis for medical patients is suboptimal. It has historically been characterized by underuse but increasingly by overuse, with >85% of patients receiving inappropriate VTE prophylaxis in some studies.4 As a result, calls have been made to “deimplement” VTE prophylaxis in patients at low risk for VTE and patients at high risk of bleeding.5 To minimize both underuse and overuse, the ASH guidelines on VTE prophylaxis state that when “clinicians and health care systems use ASH VTE guidelines, they should integrate VTE and bleeding risk assessments into clinical decision-making processes.”6 However, the ASH guidelines also note that RAM-based decision-making needs further evaluation of its impact on clinical outcomes.6 In this article, we showed that the application of RAMs can lead to the development of decision support that is superior to alternative approaches to VTE prophylaxis. We accomplished this by converting IMPROVE VTE and bleeding risk models8,9 into FFT(T) decision trees to enable the quantification and impact assessment of competing clinical VTE prophylaxis strategies.17-21,47 FFT derives its theoretical robustness from its relation to signal detection theory, evidence accumulation theory, and the threshold model, which helps improve decision making.17-21,47 Importantly, we observed a meaningful impact within a “learning health systems”41 framework, which aims to bridge the gaps between efficacy data (the best evidence observed in ideal research settings) and real-world case-mix data that often do not reflect controlled research settings.39,40 In doing so, we demonstrated that ∼45% of inappropriate VTE prophylaxis, amounting to ∼$5 million in resource waste annually, could be avoided (Figure 7; supplemental Appendix 8).

Our study adds to a series of other studies that have externally validated the IMPROVE bleeding scores in various hospital settings,13-15 testifying to the utility of the IMPROVE model. Unfortunately, unlike other studies,10,12,13 we could not externally validate the IMPROVE VTE risk model. This is likely because our sample size was small (n = 1849, Figure 3B) compared with the 15 156 patients enrolled in the original study.8 Another reason, as explained earlier, is that our population differed from the original IMPROVE cohort in the number of transfers to the intensive care unit during index hospitalization (30.2% vs 4.89%).13 Finally, we did not collect data on 1 of the IMPROVE predictors (immobility >7 days) (Tables 1 and 2). Despite this, we could internally validate the IMPROVE VTE score and obtain almost identical risk scores to those reported in the original IMPROVE VTE article (Table 3). Thus, IMPROVE predictors appear to have been reproducibly validated for their prognostic value across various settings. In addition, important evidence regarding the validity of the IMPROVE model came from a recent cluster randomized trial, which demonstrated that when a somewhat modified IMPROVE-D-dimer model (IMPROVE-DD) was embedded in an electronic health record (EHR) clinical decision support system, appropriate thromboprophylaxis significantly increased, yielding a reduction in VTE events with no increase in bleeding rates.48

Based on our multidimensional modeling using the “best evidence approach,” “learning health system paradigm,” and “real-world data analysis,” we endorse IMPROVE RAMs as the optimal approach to VTE hospital prophylaxis. The first 2 approaches (Figures 5 and 6) unequivocally demonstrated the superiority of the FFT(T) approach, as shown in Figure 1, for hospital VTE prophylaxis. However, analysis using only MUSC data (supplemental Appendix 7) suggests that VTE prophylaxis should be offered to all patients, regardless of their VTE risk score. This recommendation appears to contradict the impact analysis, which indicates that the optimal strategy might be to “administer prophylaxis to none,” thus questioning the necessity of VTE prophylaxis. We suspect that the discrepancy between the FFT and FFTT classification results stems from the small sample size, yielding unrealistic estimates of the zero-threshold probability at the second FFT cue. To observe a 40% reduction in VTE from our baseline of 2.2% (as detailed in Tables 1 and 2), following the ASH guidelines,6 we estimated a requirement to enroll at least 3104 patients. Consequently, this led to pharmacologically implausible results in our calculated treatment effects.

Another limitation is that our study is based on retrospective and modeling analyses. Despite our independent verifications of ICD10 codes, a prospective study implementing the proposed decision support in EHR at the point of care is needed to assess the full impact of the proposed management for VTE prophylaxis.

Our analysis, which has relied on a strong theoretical framework, demonstrates that the FFT(T)-based decision tree (Figure 1) informed by an IMPROVE VTE score ≥2 or ≥4 and a bleeding score <7 offers the best approach to hospital prophylaxis. It is important to place this conclusion in the correct perspective: the claim here is not that IMPROVE models are perfect (none of the RAMs are) but that relying on the RAMs provides the opportunity for much better management than other default strategies (such as offering VTE prophylaxis to all medically hospitalized patients without contraindication, offering it to none, or relying on health providers' gestalt for VTE prophylaxis).

In conclusion, our analysis, grounded in a robust theoretical framework, revealed that the decision tree based on FFT(T) (Figure 1), guided by an IMPROVE VTE score of ≥2 or ≥4 and a bleeding score of <7, represents the optimal approach to hospital prophylaxis. Following Spyropoulos et al,48 we call for embedding the IMPROVE-based FFT(T) into EHR at the point of care to realize the full impact of VTE prophylaxis. Ideally, further implementation of FFT(T) decision support should be accompanied by prospective studies, including multi-institutional pragmatic randomized trials with cost-effectiveness analyses, to best ascertain the broader socioeconomic value of the proposed approach to VTE hospital prophylaxis.

Acknowledgment

This project is conducted within the purview of the Hematology Stewardship Program at the Medical University of South Carolina, Charleston, SC.

Authorship

Contribution: B.D. conceived the project, performed analysis, and wrote the first draft; S.A. and F.K. verified the data; N.M. and S.M. identified eligible patients using ICD10 codes; A.B., S.K., and D.B.S. provided organizational and administrative support; K.Y. provided content-related input; I.H. created statistical codes for the analysis; and all authors approved the manuscript.

Conflict-of-interest disclosure: The authors declare no competing financial interests.

Correspondence: Benjamin Djulbegovic, Division of Hematology & Oncology, Department of Medicine, Medical University of South Carolina, 39 Sabin St, MSC 635, Charleston, SC 29425; email: djulbegov@musc.edu.

References

Author notes

Data are available on request from the corresponding author, Benjamin Djulbegovic (djulbegov@musc.edu).

The full-text version of this article contains a data supplement.