TO THE EDITOR:

"No man can judge what is good evidence on any particular subject, unless he knows that subject well." (George Eliot, "Middlemarch")

Clinical practice guidelines are an important tool for improving health care decision-making and outcomes.1 In an unpublished 2021 survey of 454 practicing hematologists and hematopathologists conducted for the American Society of Hematology (ASH), 91% of respondents indicated that guideline panels should comprise individuals reputed to be experts. Thus, the successful production of guidelines, and trust in the finished product, depend on recruiting panelists with a variety of expertise.2-7 The optimal method for identifying experts to serve on guideline panels, however, has not been established.

There is no agreed-upon method to identify individuals with expertise. Theories of medical expertise typically hold that expertise requires the successful integration of content-based basic scientific and clinical knowledge essential for clinical problem-solving.8-10 Because this integration is difficult to measure, experts have instead been identified using criteria based on the activities and experiences that can lead to the development of expertise. One such criterion rests on the belief that someone who has spent many hours in deliberate practice, popularly referred to as the "10 000-hour rule," can attain the status of an expert.11,12 However, prior work has indicated that experience itself is a poor surrogate marker, because the number of years in practice does not reliably correlate with favorable clinical outcomes.13 Other surrogates, such as reputation (a reflection of popularity), education (a minimally expected level of competence), or title (titles remain even if our skills deteriorate), are also unreliable markers of expertise.14,15

Theories of medical expertise indicate that both content and methodological expertise should be incorporated into guideline panels. Domain knowledge and experience are generally viewed as necessary components of expertise but are not deemed sufficient to attain the status of expert.7 In addition to content knowledge, the methodological skills required to generate trustworthy guidelines include competence in applying evidence-based medicine (EBM) principles,1 as advanced through systems for rating the certainty of evidence and the strength of recommendations.16

According to Weiss and Shanteau, the cornerstone of expertise is judgmental competence.14,17-20 This implies that panelists with different backgrounds will bring complementary perspectives, which, paired with EBM principles, should foster the development of robust guidelines. The judgments of content experts, however, have been criticized as an obstacle to developing trustworthy guidelines, because conflicts of interest and the vested interests of professional societies can introduce bias among some panelists.1,21,22 As a result, some have suggested that guidelines should be developed only by panelists who are methodological experts, which would eliminate judgments on content entirely.1,21,22 However, this proposal risks stripping panels of the content knowledge required to contextualize recommendations and make them useful to practitioners and the public. It also raises the risk of inaccurate recommendations, because methodologists who lack clinical understanding are prone to faulty judgments.16,21-28

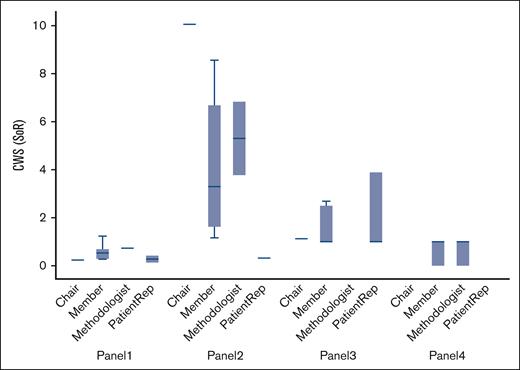

Over several decades, objective methods for defining expertise have been developed. Weiss and Shanteau have argued that, given the vagaries of traditional methods of identifying experts, expertise needs to be assessed empirically. This empirical measure, proposed for use in the absence of a gold standard, has been dubbed the CWS (Cochran, Weiss, and Shanteau) index (Figure 1).14,17-20 The CWS index represents a continuum and can be assessed only by comparing experts with one another. This makes it infeasible to apply at the inception of guideline panel development and suggests that expertise cannot be identified a priori.

Figure 1. Comparison of the CWS index across 4 guideline panels. The CWS index was used to measure expertise in guideline panels. It is defined as the ratio of discrimination (the judge's differential evaluation of the various stimuli within a given set) to inconsistency (the complement of consistency, ie, variation in the judge's evaluation of the same stimuli over time). The higher the CWS ratio, the better the expert's performance.14,17-20 Expertise is therefore relative to one's peers, and it is challenging, if not impossible, to identify experts in advance of a guideline panel without a "ground truth" or gold standard against which to compare their knowledge.14,17-20 Participants rated the strength of recommendations (SoR) before and immediately after the meeting deliberations. Although the CWS index for panel 2 was statistically significantly higher than that of the other panels (P = .019), no statistically significant difference in judgments was detected among panel members according to their role on the panel, possibly because of the small number of panelists (n = 45). The analysis used a linear mixed-effects model to account for the clustering of panelists' judgments within guideline panels. Data are unpublished and derived from ASH and other society guidelines. The large variation in judgments among panel members argues for using the CWS index to identify the best people to serve on a panel, and the significant differences between panels suggest that the panels relied on different types of knowledge.2,3
Limitations of this approach include the absence of an absolute value or cutoff above which someone can be labeled an expert. In addition, as noted in the main text, the index cannot be applied a priori, which makes its practical application difficult.
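As a minimal sketch of the ratio described in the Figure 1 legend, the Python function below computes a CWS-style index for a single judge. It assumes one simple operationalization, offered for illustration and not necessarily the computation behind Figure 1: discrimination is taken as the variance of the judge's mean ratings across different stimuli (eg, recommendations), and inconsistency as the average variance of the judge's repeated ratings of the same stimulus (eg, SoR before and after deliberation). The function names, the 1 to 4 rating scale, and the panel data are hypothetical.

```python
from statistics import mean, pvariance


def cws_index(judgments: dict[str, list[float]]) -> float:
    """Return a CWS-style ratio (discrimination / inconsistency) for one judge.

    `judgments` maps each stimulus (eg, a draft recommendation) to the judge's
    repeated ratings of it. Assumed operationalization, for illustration only:
      discrimination = variance of the per-stimulus mean ratings
      inconsistency  = mean of the within-stimulus variances
    """
    if len(judgments) < 2:
        raise ValueError("at least two stimuli are needed to measure discrimination")
    if any(len(r) < 2 for r in judgments.values()):
        raise ValueError("each stimulus needs at least two repeated ratings")

    stimulus_means = [mean(map(float, r)) for r in judgments.values()]
    discrimination = pvariance(stimulus_means)
    inconsistency = mean(pvariance(list(map(float, r))) for r in judgments.values())

    # A perfectly consistent judge has zero inconsistency; treat the ratio as unbounded.
    if inconsistency == 0:
        return float("inf")
    return discrimination / inconsistency


def rank_by_cws(panel: dict[str, dict[str, list[float]]]) -> list[tuple[str, float]]:
    """Rank panelists by CWS; the scores are meaningful only relative to each other."""
    scores = {name: cws_index(j) for name, j in panel.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical ratings on a 1 to 4 scale: three recommendations, each rated twice.
panel = {
    "panelist_a": {"rec1": [1, 1], "rec2": [4, 3], "rec3": [2, 2]},
    "panelist_b": {"rec1": [2, 3], "rec2": [3, 2], "rec3": [2, 3]},
}
for name, score in rank_by_cws(panel):
    print(f"{name}: CWS = {score:.2f}")
```

Because the scores only order panelists against one another and carry no absolute cutoff, a sketch like this can rank an existing panel once repeated judgments have been collected, but it cannot flag experts before the panel convenes.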

Identifying expert panelists for clinical practice guideline panels

This brief review of theoretical and empirical knowledge on expertise has the following implications for selecting individuals to serve on guideline panels:

Firstly, there is no universally accepted definition of expertise and no well-defined, validated approach for selecting guideline panelists.7,14 As a result, it is usual practice to let those in a domain define that domain’s experts.7 Narrow measures of physician experience (eg, publications, tenure, and career stage) may be used to identify panelists, but with the recognition that this is an imperfect practice. Reducing reliance on these proxy measures would allow a broader array of individuals to participate in guideline development, enhancing panel diversity while adhering to methodological EBM approaches. Because domain knowledge and relevant experience are necessary but not sufficient for expertise, persons with adequate training and reputation are usually nominated as panelists.

Secondly, expertise is not universal: the same individuals may display expert competence in some settings but not in others, because expert competence depends on task characteristics.14,17-20 For example, acute leukemia specialists with advanced knowledge in that discipline would not be considered for nonleukemia panels. Including providers from different disciplines (eg, hematologists, hematopathologists, and palliative care providers on a leukemia panel) is expected to provide complementary perspectives and enhance the quality of the guidelines. Correctly matching an individual's task expertise with the scope of the guideline panel is therefore a critical component of panel development.

Thirdly, experts use various psychological and cognitive strategies to support their decisions. These are difficult to measure but generally include the use of dynamic feedback; reliance on decision aids, including evidence summaries generated by systematic reviews; a tendency to decompose complex decision problems; and revisiting solutions to the problem at hand.7 Most importantly, when a task has suitable characteristics, experts usually make accurate and reliable judgments. Such tasks are similar, stable, and predictable or repetitive over time; they can often be clearly articulated and decomposed; and they are suitable for objective analysis or can be solved with decision aids.7 An “unaided expert may be an oxymoron since competent experts will adopt whatever aids are needed to assist their decision making.”7

Role of chair and cochair persons

The roles of the guideline chair and cochairs are essential to the success of guideline development. Research on decision-making in guideline panels shows that chairs and cochairs substantially steer the conversation during meetings. In a survey of voting members of guideline panels, chairs and cochairs made up <10% of the panel members yet accounted for >50% of the discussion.29 A separate analysis reported similar results, with the chair, cochair, and methodologist initiating and receiving >50% of all communication, and 42% of communication occurring among these individuals.29
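The participation figures above are simple proportions over coded communication. As a hedged illustration, the sketch below assumes each exchange in a meeting has been coded as a (speaker role, addressee role) pair and computes two quantities analogous to those reported: the share of exchanges initiated or received by a leadership group (chair, cochair, methodologist) and the share occurring entirely within that group. The role labels, data format, function name, and meeting data are hypothetical and do not reproduce the method of the cited analysis.

```python
from collections.abc import Iterable

# Assumed role labels for the leadership group.
LEADERSHIP = frozenset({"chair", "cochair", "methodologist"})


def leadership_shares(exchanges: Iterable[tuple[str, str]],
                      leaders: frozenset[str] = LEADERSHIP) -> tuple[float, float]:
    """Return (share involving a leader, share exclusively among leaders).

    Each exchange is a (speaker_role, addressee_role) pair.
    """
    exchanges = list(exchanges)
    if not exchanges:
        raise ValueError("no exchanges to analyze")
    # Initiated or received by at least one member of the leadership group.
    involving = sum(1 for s, a in exchanges if s in leaders or a in leaders)
    # Both speaker and addressee belong to the leadership group.
    within = sum(1 for s, a in exchanges if s in leaders and a in leaders)
    n = len(exchanges)
    return involving / n, within / n


# Hypothetical coded exchanges from one meeting.
meeting = [
    ("chair", "member"), ("member", "chair"), ("methodologist", "chair"),
    ("cochair", "methodologist"), ("member", "member"), ("member", "cochair"),
    ("member", "member"), ("chair", "cochair"),
]
involving, within = leadership_shares(meeting)
print(f"involving leadership: {involving:.0%}; within leadership: {within:.0%}")
```

Comparing the first share with the leadership group's proportion of panel seats gives a quick indication of whether a few roles dominate the discussion.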

Role of empirical evidence

The use of systematic reviews, evidence tables, and/or decision aids is a prerequisite for developing evidence-based guidelines and thus creates an environment favorable for exercising accurate and reliable expertise at both the domain and methodological levels. Indeed, empirical data show that expert panelists exhibit important features of high-ability participants, namely, following instructions that require cognitive effort and suppressing the influence of other factors and prior beliefs, although nonreproducible and biased judgments can still occur.30 The latter may occur because most panelists receive little training in EBM or Grading of Recommendations Assessment, Development, and Evaluation (GRADE) practices and rely on chairs and cochairs (who are typically methodologists) to guide the process. An unfortunate consequence, as outlined earlier, is that these individuals dominate the process.

Practical approach to selecting panelists and ASH’s process

We can attempt to identify individuals to serve on guideline panels by adhering to the theoretical considerations of expertise, particularly domain knowledge and relevant experience. For organizations such as ASH, large numbers of people can be expected to meet these criteria. How, then, should panelists be selected from a large pool of qualified candidates? At present, most panelists are selected by word of mouth. This approach is pragmatic and efficient, yet it risks excluding less prominent content experts and reduces diversity, equity, and inclusion; for these reasons, it is suboptimal. Soliciting public nominations, by contrast, can help assure a diverse panel of individuals who meet conflict-of-interest criteria and wish to serve.

When an empirical or evidence-based approach is not possible, a transparent and explicit process may foster trust and increase confidence in panel selection. For example, recognizing the influential role of chairs and cochairs in guideline development, the UK National Institute for Health and Care Excellence publicly advertises the chair position and other panel positions with a detailed job and person profile.4 It should be emphasized that the chair's responsibility is to chair the committee, not to purvey topic or methodological knowledge. All professional organizations can adopt this approach and ask their members to nominate (or self-nominate) a chair and participants from an eligible pool of members.

We can also adopt good practices from survey research to help select qualified individuals fairly and without bias. Many societies keep directories that include members' areas of expertise. Using a lottery to choose guideline panelists from the eligible membership pool is a transparent mechanism for ensuring fairness in the process.31 For example, we can use a directory to identify the target population from which panelists will be drawn (eg, all acute myeloid leukemia experts who are ASH members). Next, we identify the sampling frame (ie, adult acute myeloid leukemia experts who are ASH members, potentially enriched with additional experts who are not ASH members). Finally, we use randomization to draw an unbiased final sample of 10 or 20 individuals to be invited to serve on the panel. Randomization can be stratified to ensure that panels are diverse and representative. The assumption here is that by focusing on a fair process, we will also identify competent individuals, who are hypothesized to exist among the members of a professional society.
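The lottery steps above (target population, sampling frame, stratified randomization) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the member directory, its fields (`name`, `expertise`, `career_stage`), the use of career stage as the stratification variable, and the largest-remainder rule for allocating seats proportionally are all hypothetical choices, and a real process would also apply conflict-of-interest and availability checks before the draw. Recording the random seed lets the draw be reproduced and audited, which supports the transparency argument.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Member:
    name: str
    expertise: str      # hypothetical directory field, eg "adult AML"
    career_stage: str   # hypothetical stratification variable


def stratified_lottery(frame: list[Member], k: int, seed: int) -> list[Member]:
    """Randomly draw k panelists from the sampling frame, stratified by career stage.

    Seats are allocated to strata in proportion to stratum size (largest
    remainder method), then filled by simple random sampling within each stratum.
    """
    if not 0 < k <= len(frame):
        raise ValueError("k must be between 1 and the size of the sampling frame")
    rng = random.Random(seed)  # a recorded seed makes the draw reproducible and auditable

    strata: dict[str, list[Member]] = defaultdict(list)
    for member in frame:
        strata[member.career_stage].append(member)

    # Exact proportional quotas; floor them, then give leftover seats to the
    # strata with the largest fractional remainders.
    quotas = {s: Fraction(k * len(ms), len(frame)) for s, ms in strata.items()}
    seats = {s: int(q) for s, q in quotas.items()}
    leftover = k - sum(seats.values())
    by_remainder = sorted(sorted(quotas), key=lambda s: quotas[s] - seats[s], reverse=True)
    for s in by_remainder[:leftover]:
        seats[s] += 1

    panel: list[Member] = []
    for s in sorted(strata):  # fixed order, so the seed alone determines the draw
        panel.extend(rng.sample(strata[s], seats[s]))
    return panel


# Hypothetical directory (target population) of 40 members in two fields.
directory = [
    Member(f"member_{i:02d}", "adult AML" if i % 2 == 0 else "myeloma",
           "early" if i < 12 else "senior")
    for i in range(40)
]
# Sampling frame: adult AML experts only (6 early-career, 14 senior).
frame = [m for m in directory if m.expertise == "adult AML"]
panel = stratified_lottery(frame, k=10, seed=2024)
print(sorted(m.name for m in panel))
```

Proportional allocation by career stage is only one choice; the same structure could stratify by other characteristics an organization wishes to balance, provided each stratum is large enough to fill its seats.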

Recognizing that trusted experts are required and that no well-established method for identifying them exists, the ASH Committee on Quality forms an ad hoc group to oversee the development of new guideline panels. A public recruitment process can be used to generate a large, diverse pool of candidates, who are then vetted for interest, availability, and eligibility to serve on the panel. Through an iterative process, a final panel of ∼25 individuals can be developed and sent to the ASH Committee on Quality for approval.

Guideline developers and end users place a high value on panelists’ expertise; however, it can be challenging to determine whether panelists are genuine experts. Expertise cannot be identified a priori, but proxy markers of productivity, reputation, and experience, combined with a transparent and explicit guideline development process, can make it more likely that guideline panelists are truly experts, thereby increasing the likelihood that their recommendations are robust and trustworthy.

Acknowledgments: This work was supported in part by grant R01HS024917 from the Agency for Healthcare Research and Quality (B.D.).

The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

Contribution: M.B., R.M., R.K., D.S., and B.D. developed the initial draft; M.B. and B.D. wrote the manuscript; and all authors reviewed, edited, and approved the final version.

Conflict-of-interest disclosure: The authors declare no competing financial interests.

Correspondence: Benjamin Djulbegovic, Division of Hematology/Oncology, Department of Medicine, Medical University of South Carolina, 39 Sabin St MSC 635, Charleston, SC 29425; e-mail: djulbegov@musc.edu.

References

Author notes

Data are available on request from the corresponding author, Benjamin Djulbegovic (djulbegov@musc.edu).