In this issue of Blood, Hod et al1 present results of the Donor Iron Deficiency Study (DIDS), a randomized controlled trial that demonstrates that iron repletion in iron-deficient blood donors fails to improve posttransfusion recovery of a subsequently donated red blood cell (RBC) unit or the quality of life and cognitive performance of the blood donor.

RBC transfusion is the most common therapeutic intervention in hospitalized patients. Unlike the vast majority of patient interventions, however, transfused RBCs are a biologic harvested directly from altruistic donors. Most blood donor eligibility criteria, including questionnaires and infectious disease testing, are designed to ensure the safety of the transfusion recipient. Yet beyond the time and resources it requires of donors, blood donation itself is not without risk. Most complications are transient (eg, vasovagal reactions) and have few, if any, long-term sequelae. A substantial percentage of repeat blood donors, however, develop iron deficiency, and the effects of donor iron deficiency on the quality of the donated unit and on the overall health of the donor remain incompletely understood.

First described more than 80 years ago,2,3 iron deficiency among blood donors results from a mismatch between the rate of iron loss through blood donation and the rate of iron repletion from a regular postdonation diet. Donation of a single whole blood unit removes 200 to 250 mg of iron. Without supplemental iron, dietary intake typically requires >170 days to replace the iron lost during donation,4 far longer than the minimum 56-day interval between whole blood donations mandated in the United States. In addition, other causes of chronic blood loss (eg, menstruation), reduced intake of bioavailable iron (eg, dietary choices), or reduced iron absorption (eg, use of acid-blocking agents) can further increase iron deficits in blood donors.5 As a result, substantial effort has been made to define the scope and clinical implications of iron deficiency in blood donors.5
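The size of this mismatch can be illustrated with the figures quoted above. The sketch below introduces an illustrative symbol, $L$, for the iron lost per whole blood donation, and assumes, for simplicity only, that dietary repletion proceeds at a constant rate (in reality, iron absorption is regulated and varies between individuals). Because full replacement takes more than 170 days, a donor giving blood at the minimum 56-day interval regains less than $56/170$ of the lost iron before the next donation:

$$
\frac{t_{\text{replete}}}{t_{\text{interval}}} > \frac{170\ \text{d}}{56\ \text{d}} \approx 3.0
$$

$$
\Delta\mathrm{Fe}_{\text{cycle}} > L\left(1 - \frac{56}{170}\right) \approx 0.67\,L, \qquad L = 200\ \text{to}\ 250\ \text{mg} \;\Rightarrow\; \Delta\mathrm{Fe}_{\text{cycle}} \gtrsim 134\ \text{to}\ 168\ \text{mg}
$$

Under these assumptions, each donation cycle at the minimum interval leaves a net iron deficit of at least roughly two-thirds of the iron removed, before accounting for the additional sources of loss listed above.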

Although iron-deficient individuals with hematocrit levels below the requirements for donation are deferred, up to 35% of individuals with acceptable hematocrit levels for donation may have ferritin levels indicative of iron deficiency. Given the nonanemic sequelae of iron deficiency, most notably impaired neurological function,6 expert opinion–based policy has recommended that donation facilities “monitor, limit, or prevent iron deficiency in blood donors.”7 In this context, the Hemoglobin and Iron Recovery Study demonstrated that iron supplementation can increase the rate of hemoglobin and ferritin recovery after donation of a single whole blood unit.4 However, a recent placebo-controlled trial in iron-deficient blood donors showed that although iron repletion increased hemoglobin, no changes in self-reported fatigue or general well-being were noted,8 suggesting that the benefits of iron supplementation on overall donor health may be minimal.

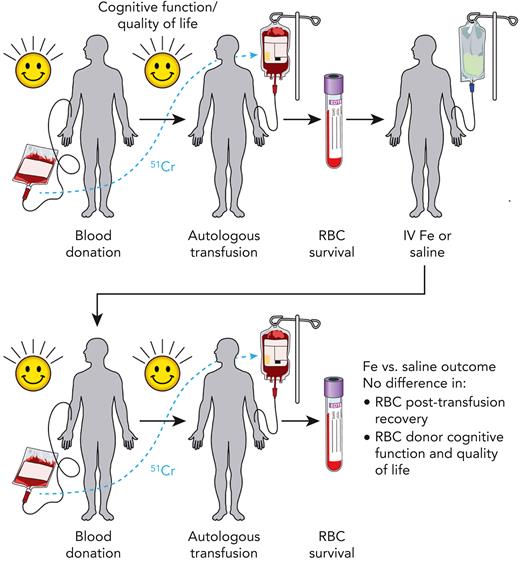

Before the DIDS, 2 key questions regarding blood donor iron deficiency remained: (1) Does iron repletion of iron-deficient blood donors improve the quality of the subsequently donated RBC product as measured by posttransfusion recovery? (2) Does iron repletion of iron-deficient blood donors improve donor cognitive performance? To address these questions, Hod et al enrolled 79 frequent blood donors who were iron deficient and eligible to donate blood (see figure). Among these 79 study participants, 54 were women (68%) and 25 were men (32%), consistent with the sex-biased prevalence of iron deficiency among blood donors.1

Study schema of the DIDS. Frequent blood donors who were iron (Fe) deficient (defined as ferritin <15 μg/L and zinc protoporphyrin >60 μmol/mol heme) but eligible for blood donation were recruited. Each participant donated an RBC unit, which was stored for 40 to 42 days, labeled with chromium-51 (⁵¹Cr), autologously transfused, and then assessed for 24-hour posttransfusion recovery. These individuals were then randomized to receive intravenous (IV) saline or iron. Approximately 145 days (129-162 days, with some pandemic-related exceptions) after randomization, participants donated a second RBC unit, which was likewise stored for 40 to 42 days, labeled with ⁵¹Cr, autologously transfused, and assessed for 24-hour posttransfusion recovery. Quality of life (via the RAND Health Survey) and cognitive function (via the Cognition Fluid Composite Score) were assessed before each blood donation and each measurement of 24-hour posttransfusion recovery. Although iron repletion successfully corrected iron deficiency, RBC units donated by iron-repleted individuals showed, on average, no difference in 24-hour posttransfusion recovery compared with those from saline-treated controls. Secondary analyses of quality of life and cognitive function likewise found no difference between iron-repleted and saline-treated blood donor participants.

The study used a trial design that enabled within-subject measurements before and after intravenous iron or saline. Study subjects first received an autologous transfusion of chromium-51–radiolabeled RBCs stored for 40 to 42 days, followed by quantitation of the radiolabeled cells remaining in circulation 24 hours posttransfusion; according to US Food and Drug Administration regulations, >75% of radiolabeled RBCs must be detectable 24 hours posttransfusion. Within 30 days of this first measurement of posttransfusion RBC recovery, subjects were randomized to receive 1 g of intravenous iron or saline. Approximately 5 months after randomization, a second autologous collection and transfusion of radiolabeled RBCs was performed, followed by a second measurement of 24-hour posttransfusion RBC recovery. The study's primary outcome was the within-subject change in posttransfusion RBC recovery between the first and second transfusions. In addition, laboratory markers of iron status were measured, and standardized assessments of cognitive function and quality of life were performed.
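The primary outcome can be written compactly. The notation below is introduced here for illustration and is not taken from the trial report: $R_i^{(1)}$ and $R_i^{(2)}$ denote the percentage of ⁵¹Cr-labeled RBCs still circulating 24 hours after participant $i$'s first and second autologous transfusions, respectively.

$$
\Delta R_i = R_i^{(2)} - R_i^{(1)}
$$

Because each participant serves as their own control, stable between-donor differences in baseline recovery cancel out of $\Delta R_i$, and the comparison of interest is between the $\Delta R_i$ values of the iron repletion group and those of the saline group. In this notation, the regulatory benchmark mentioned above corresponds to $R > 75\%$.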

Blood donors in the iron repletion group showed increased hemoglobin, ferritin, and hepcidin levels and decreased zinc protoporphyrin over time. The hemoglobin concentration in RBC units collected from donors in the iron repletion group was also higher than in units from donors in the saline group. When the change between the first and second autologous transfusions was compared across all study subjects, 24-hour posttransfusion RBC recovery did not differ significantly between the iron repletion and saline groups. Assessments of quality of life and cognitive function likewise revealed no significant differences between donors in the iron and saline groups.

Altogether, the DIDS randomized controlled trial suggests that, on average, correction of iron deficiency in frequent blood donors does not significantly affect posttransfusion RBC recovery or donor cognitive function. Nonetheless, it remains unclear whether there are specific subsets of donors for whom iron repletion might improve blood product quality or cognition. Indeed, among participants who received iron repletion, female subjects, but not male subjects, showed a statistically significant increase in posttransfusion RBC recovery. Moreover, the study excluded individuals 16 to 18 years old, who make up >10% of donors in the United States and are at higher risk of developing iron deficiency and related complications.9,10 These donors may be particularly vulnerable because of ongoing neurological development, during which the consequences of iron deficiency may not be acutely manifest but may instead become apparent long after donation. Identifying the individuals at highest risk of morbidity from iron deficiency could help guide donor-specific recommendations for iron repletion, changes in the eligible age range, and adjustments to donation frequency. Although these questions remain open, the present results provide important guidance on iron replacement for iron-deficient blood donors and suggest that the quality of the subsequently donated unit and the cognitive performance of the donor remain largely unaffected by iron replacement.

Conflict-of-interest disclosure: S.R.S. is a consultant for Alexion, Novartis, Cellics, and Argenx, and receives honoraria for speaking engagements for Grifols. R.B. declares no competing financial interests.
