Abstract

A high relapse rate remains the main threat to long-term survival in acute lymphoblastic leukemia (ALL). Risk stratification based on conventional prognostic factors helps to identify patients at high risk of relapse, but not efficiently. Detection of minimal residual disease (MRD) has been widely used to further identify patients with poor prognosis. Many studies in pediatric ALL have demonstrated the prognostic value of MRD levels for predicting relapse risk and survival in children, but the evidence in adult ALL is less sufficient. It also remains unknown at what MRD level in remission allogeneic hematopoietic stem cell transplantation (allo-HSCT) should be performed. This study was designed to evaluate the prognostic effect of MRD levels at the time of first complete remission (CR1) and at transplant in adult Philadelphia chromosome-negative ALL (Ph-negative ALL), and to identify the subgroup that benefits from HSCT based on stratification by MRD level at CR1.

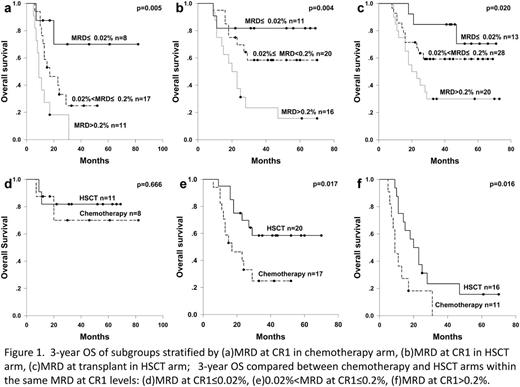

From January 2006 to December 2011, 107 adult patients with Ph-negative ALL at our center were enrolled in this study, of whom 45 were assigned to chemotherapy and 62 to allo-HSCT. All patients received induction regimens based mainly on vincristine and corticosteroids (VDP, VDCP, VDLP, VDCLP), followed by consolidation with VDCP, VMCP, hyper-CVAD, etc. For patients allocated to allo-HSCT, the conditioning regimen was mainly BuCy without total body irradiation, and the grafts came from 15 HLA-matched sibling donors (24.2%), 27 unrelated donors (43.5%), and 20 haploidentical donors (32.3%). Bone marrow samples were collected for MRD detection by 4-color flow cytometry. In the chemotherapy arm, MRD was measured at first morphologic remission (MRD at CR1); in the HSCT arm, MRD was additionally measured just before HSCT (MRD at transplant). Because some samples were lost, the final statistical analysis included 36 MRD at CR1 samples in the chemotherapy arm, and 47 MRD at CR1 and 61 MRD at transplant samples in the HSCT arm. Survival was compared among risk groups stratified by MRD level using cut-offs of 0.02% and 0.2%. In addition, within each MRD at CR1 stratum, survival was compared between the chemotherapy and HSCT arms to identify the subgroups that benefited from allo-HSCT.
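The three-level stratification by the 0.02% and 0.2% cut-offs can be sketched in a few lines of Python. This is only an illustration of the grouping rule: the function name `mrd_stratum`, the stratum labels, and the example values are assumptions made for this sketch, not the study's analysis code or patient data.

```python
from collections import Counter

# Cut-offs taken from the abstract, expressed as percent of cells.
LOW_CUTOFF = 0.02
HIGH_CUTOFF = 0.2


def mrd_stratum(mrd_percent: float) -> str:
    """Assign an MRD value (in percent) to one of three risk strata.

    Hypothetical helper: boundaries follow the abstract's notation,
    i.e. <=0.02%, >0.02% and <=0.2%, and >0.2%.
    """
    if mrd_percent < 0:
        raise ValueError("MRD percentage cannot be negative")
    if mrd_percent <= LOW_CUTOFF:
        return "MRD<=0.02%"
    if mrd_percent <= HIGH_CUTOFF:
        return "0.02%<MRD<=0.2%"
    return "MRD>0.2%"


# Made-up example values for illustration only (not patient data).
example_values = [0.0, 0.02, 0.05, 0.2, 0.35]
counts = Counter(mrd_stratum(v) for v in example_values)
```

Values that fall exactly on a cut-off are assigned to the lower stratum, which matches the "≤" notation used for the groups.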

The HSCT arm had better 3-year overall survival (OS) and disease-free survival (DFS) than the chemotherapy arm (OS 55.7% vs. 27.9%, P=0.002; DFS 54.4% vs. 18.2%, P<0.001). The relapse rate was significantly lower in the HSCT arm (29.9% vs. 76.5%, P<0.001). In the chemotherapy arm, the 3-year OS for MRD at CR1≤0.02%, 0.02%<MRD at CR1≤0.2%, and MRD at CR1>0.2% was 70.0%, 24.8%, and 0%, respectively (P=0.005, Figure 1a). In the HSCT arm, the 3-year OS for MRD at CR1≤0.02%, 0.02%<MRD at CR1≤0.2%, and MRD at CR1>0.2% was 81.8%, 58.4%, and 23.4%, respectively (P=0.004, Figure 1b), and the 3-year OS for MRD at transplant≤0.02%, 0.02%<MRD at transplant≤0.2%, and MRD at transplant>0.2% was 84.6%, 59.3%, and 30.0%, respectively (P=0.020, Figure 1c). An improvement in 3-year OS with HSCT was observed in the subgroups with MRD at CR1>0.02% (0.02%<MRD at CR1≤0.2%: HSCT 58.4% vs. chemotherapy 24.8%, P=0.017, Figure 1e; MRD at CR1>0.2%: HSCT 23.4% vs. chemotherapy 0%, P=0.016, Figure 1f), but not in the subgroup with MRD at CR1≤0.02% (HSCT 81.8% vs. chemotherapy 70.0%, P=0.666, Figure 1d). Multivariate Cox analysis confirmed that higher levels of MRD at CR1 and MRD at transplant were the most statistically significant adverse factors for OS.
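To make the per-stratum comparison easier to read, the reported 3-year OS figures can be tabulated and the absolute difference between arms computed. The sketch below is descriptive arithmetic on the numbers quoted above, not a re-analysis: the variable names, the helper `summarize`, and the 0.05 significance threshold are assumptions, since the abstract does not state the threshold it used.

```python
# 3-year OS (%) and P values by MRD-at-CR1 stratum, copied from the abstract.
three_year_os = {
    "MRD<=0.02%": {"hsct": 81.8, "chemo": 70.0, "p": 0.666},
    "0.02%<MRD<=0.2%": {"hsct": 58.4, "chemo": 24.8, "p": 0.017},
    "MRD>0.2%": {"hsct": 23.4, "chemo": 0.0, "p": 0.016},
}

ALPHA = 0.05  # assumed conventional threshold; not stated in the abstract


def summarize(os_table, alpha=ALPHA):
    """Return (stratum, absolute OS difference in points, significant?) rows."""
    rows = []
    for stratum, values in os_table.items():
        # Round to one decimal to match the precision of the reported figures.
        diff = round(values["hsct"] - values["chemo"], 1)
        rows.append((stratum, diff, values["p"] < alpha))
    return rows


for stratum, diff, significant in summarize(three_year_os):
    flag = "significant" if significant else "not significant"
    print(f"{stratum}: HSCT minus chemotherapy = {diff} points ({flag})")
```

The absolute difference is only a summary of the reported percentages; the P values themselves come from the study's survival comparisons and are not recomputed here.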

MRD levels at the time of CR1 and at transplant strongly predict outcome in adult Ph-negative ALL; patients with MRD at CR1>0.02% benefit from allo-HSCT.

No relevant conflicts of interest to declare.

Author notes

Asterisk with author names denotes non-ASH members.
